How to verify your Stylus contracts with the Blockscout API, Multiple contracts in a Stylus project, Supporting Stylus on Ethereum RISC-V (part 2)

1st of August 2025. Developer guides, and continuing the Stylus on RISC-V exercise.

Hello everyone! Bayge here (Farcaster)! We’re back. In today’s post, we’ll cover the following:

🧪 How to Verify Your Contracts with Blockscout’s API (No UI Needed!) 🚀

🧱 How to Deploy Multiple Contracts in a Stylus Project – July 2025 Edition 🛠️📦

💻 Stylus on RISC-V (RV32IM) – Part 2: SDK Support, Missing Simulator Features, and a Sample Contract 🧬⚙️📜

Read on, and as always, if you have any feedback, please share it here:

To recap, Stylus is an Arbitrum technology for building smart contracts in Rust, Zig, C, and Go. Stylus lets you build smart contracts that are 10-50x more gas efficient than Solidity smart contracts, with a much broader range of expressiveness from these other languages! Contracts can be written with no loss of interoperability with Solidity!

Click here to learn more: https://arbitrum.io/stylus

You can get started very quickly using a (community maintained) setup script at https://stylusup.sh, with official resources at https://arbitrum.io/stylus, or fully online, without any setup, using The Wizard at https://thewizard.app/!

How to verify your contracts with Blockscout’s API

It’s possible to use the Blockscout API to verify your Stylus smart contracts. Special shoutout to Rim Rakhimov from Blockscout for graciously sharing some information on how their system works behind the scenes! I’ve slightly edited what they sent me and collected it here. Read on:

Q. How does Blockscout verify Stylus contracts?

Currently, we support verifying Stylus contracts located in public GitHub repositories. The swagger file for our API endpoint to request the verification can be found here. The endpoint accepts the following fields: deployment_transaction, rpc_endpoint, cargo_stylus_version, repository_url, commit, and path_prefix.

The path_prefix is required so that repositories with several contract crates inside can be used. The repository https://github.com/blockscout/cargo-stylus-test-examples contains some great examples.

When a user visits the verification UI, they provide the cargo_stylus_version, repository_url, commit, and path_prefix values. These values, along with the address of the contract being verified, are sent to an Elixir backend. The backend retrieves the deployment_transaction from the internal database, and sends the data collected so far, together with the rpc_endpoint, to a separate verification service.

The service validates the repository, clones the repo the user provided, and checks out the commit hash. If path_prefix is provided, it enters the corresponding prefix, which is calculated relative to the repository root directory.
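
To make the path_prefix step concrete, here is a minimal sketch of resolving a prefix relative to the cloned repository root. The function name working_dir and the rejection of absolute or ".." prefixes are assumptions of mine for illustration, not documented Blockscout behaviour.

```rust
use std::path::{Component, Path, PathBuf};

/// Resolve `path_prefix` relative to the cloned repository root.
/// Hypothetical helper: returns `None` when the prefix is absolute or uses `..`,
/// so the resulting directory always stays inside the repository.
fn working_dir(repo_root: &Path, path_prefix: &str) -> Option<PathBuf> {
    // An empty prefix means the crate lives at the repository root.
    if path_prefix.is_empty() {
        return Some(repo_root.to_path_buf());
    }
    let prefix = Path::new(path_prefix);
    let stays_inside = prefix
        .components()
        .all(|c| matches!(c, Component::Normal(_) | Component::CurDir));
    if !stays_inside {
        return None;
    }
    Some(repo_root.join(prefix))
}
```

With a repository cloned to /tmp/repo, a prefix of contracts/erc20 resolves to /tmp/repo/contracts/erc20, and an empty prefix resolves to the root itself.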

Stylus requires the Rust toolchain version to be specified in a rust-toolchain.toml file. The file has to be located inside the working directory.

For verification, Blockscout uses the command cargo stylus verify --no-verify --endpoint {rpc_endpoint} --deployment-tx {deployment_transaction}.

We opt out of running verification inside the cargo-stylus Docker container by adding the --no-verify flag. We opt out because cargo-stylus requires the Docker daemon to run locally, but we would like to run the service and the Docker daemon on different machines. We implemented our own Docker container startup process, which allows us to run containers on a remote Docker daemon.

cargo stylus verify prints the result to the console. We parse the stdout and look for the line: "Verified - contract matches local project's file hashes". If it is found, the verification is considered successful; otherwise, the verification fails.
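
As a rough illustration of the two steps above (building the command, then scanning stdout), here is a sketch using only the Rust standard library. The flags and the success line are the ones quoted in the explanation; the function names are mine, and the real implementation lives in the Blockscout repository linked further down.

```rust
use std::process::Command;

/// The success line quoted in the explanation above.
const SUCCESS_LINE: &str = "Verified - contract matches local project's file hashes";

/// Build (but do not run) the verify command with the flags described above.
fn verify_command(rpc_endpoint: &str, deployment_tx: &str) -> Command {
    let mut cmd = Command::new("cargo");
    cmd.args([
        "stylus",
        "verify",
        "--no-verify",
        "--endpoint",
        rpc_endpoint,
        "--deployment-tx",
        deployment_tx,
    ]);
    cmd
}

/// Returns true if any line of the captured stdout contains the success message.
fn is_verified(stdout: &str) -> bool {
    stdout.lines().any(|line| line.contains(SUCCESS_LINE))
}
```

A caller would run the command with output(), convert the captured stdout with String::from_utf8_lossy, and pass it to is_verified.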

If successful, we also attempt to retrieve the contract ABI, as it is not returned by the verify command. To get the ABI, we run the cargo stylus export-abi command and parse its result. It prints the ABIs of all included contracts, and the verified contract ABI is printed last. The name of this ABI is also used as the contract name inside the response.
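
Picking the last ABI could be sketched like this. I am assuming here that each exported ABI appears as a Solidity declaration on a line starting with "interface "; that format is my assumption for illustration, so check it against the real command output before relying on it.

```rust
/// Return the name of the last `interface <Name>` declaration in the output.
/// Assumption: every exported ABI starts on a line beginning with `interface `.
fn last_interface_name(abi_output: &str) -> Option<&str> {
    abi_output
        .lines()
        // Keep only lines that declare an interface, dropping the keyword.
        .filter_map(|line| line.trim_start().strip_prefix("interface "))
        // The name is everything up to the first non-identifier character.
        .filter_map(|rest| {
            rest.split(|c: char| !(c.is_alphanumeric() || c == '_'))
                .next()
        })
        .filter(|name| !name.is_empty())
        .last()
}
```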

If you need the code, the main function is implemented here: https://github.com/blockscout/blockscout-rs/blob/main/stylus-verifier/stylus-verifier-logic/src/stylus_sdk_rs.rs#L76

An example of making a request to the Blockscout API for verification:

curl 'https://arbitrum.blockscout.com/api/v2/smart-contracts/<address_hash>/verification/via/stylus-github-repository' -H 'Content-type: application/json' -d '{"cargo_stylus_version":"v0.5.6","repository_url":"https://github.com/OffchainLabs/stylus-erc20","commit":"d109002ffff3df7589cbbc92f8f104567a84f085","path_prefix":"","license_type":"none"}'
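
If you would rather build the request from code than from curl, the sketch below assembles the same URL and JSON body using only the standard library. The struct and function names are mine; the field names and the endpoint path are taken from the curl example above. Sending the request is left to whichever HTTP client you prefer.

```rust
/// Fields of the verification request, as they appear in the curl example.
struct VerificationRequest<'a> {
    cargo_stylus_version: &'a str,
    repository_url: &'a str,
    commit: &'a str,
    path_prefix: &'a str,
    license_type: &'a str,
}

/// Build the endpoint URL for a given Blockscout instance and contract address.
fn verification_url(base_url: &str, address_hash: &str) -> String {
    format!(
        "{}/api/v2/smart-contracts/{}/verification/via/stylus-github-repository",
        base_url.trim_end_matches('/'),
        address_hash
    )
}

/// Minimal JSON string escaping: quotes, backslashes, and control characters.
fn json_string(value: &str) -> String {
    let mut out = String::with_capacity(value.len() + 2);
    out.push('"');
    for c in value.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out.push('"');
    out
}

impl VerificationRequest<'_> {
    /// Serialise the request in the same field order as the curl example.
    fn to_json(&self) -> String {
        format!(
            "{{\"cargo_stylus_version\":{},\"repository_url\":{},\"commit\":{},\"path_prefix\":{},\"license_type\":{}}}",
            json_string(self.cargo_stylus_version),
            json_string(self.repository_url),
            json_string(self.commit),
            json_string(self.path_prefix),
            json_string(self.license_type),
        )
    }
}
```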

Thanks again to Rim Rakhimov and the Blockscout team for this in-depth explanation! Be sure to let their team know if you found the explanation useful. You can follow them on X at https://x.com/blockscout, and you can check out their GitHub organisation at https://github.com/blockscout. Blockscout is fully open source, and community contributions are always welcome.

How to do multiple contracts in a Stylus project, July 2025 edition

I’ve seen some discussion lately in the community, with people wondering how to do multiple contracts in one location! At the time of writing, the 1.0 version of Stylus will include a feature that manages this for you. For now, we still have two ways to do this ourselves. Let’s review!

Multiple packages/Cargo workspaces

This is an approach I don’t prefer, after experiencing the frustrations of this with Longtail, the Superposition concentrated liquidity AMM.

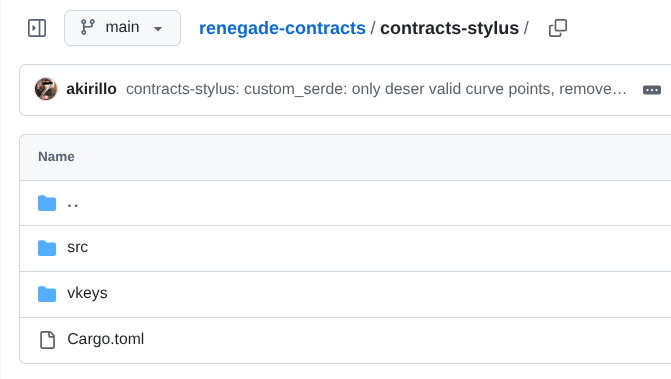

The trick is to use separate packages internal to the project using a Cargo workspace. To see how this works in practice, look at Longtail or Renegade. OpenZeppelin also takes this approach with their Stylus contracts, as this method is the easiest to use if you’re vendoring dependencies for end users.

At the root of your repository, you would need a Cargo.toml file that indicates a workspace is in use, like so:

    [workspace]
    members = [
        "contracts-stylus",
        "contracts-core",
        "contracts-common",
        "scripts",
        "contracts-utils",
        "integration",
    ]
    resolver = "2"

Then, inside each contract folder, you would have a separate project for the contract you’re supporting:

Most tools in the Stylus ecosystem let you set the specific package during your deployment and use of the tools! I imagine this will see the most support in the reproducible build department in an ongoing way.

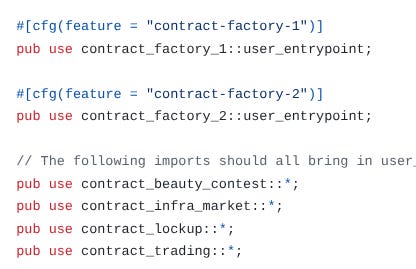

Feature flagging

It’s possible to use an approach based on feature flagging to support multiple contracts! We use a method like this for our prediction market 9lives. I prefer this method since it’s comparatively a lot simpler than using workspaces. The key is to use conditional compilation to import the user_entrypoint function depending on which contract is being built at the time. This is what our lib.rs entrypoint (partly) looks like:

So, if contract-factory-1 is set as the feature flag, then the user_entrypoint function that’s generated inside contract_factory_1 is imported, setting the entrypoint to the contract. This means that we have to set the feature flag contract-factory-1 to get the entrypoint to the contract, so the compiled wasm file is the contract for the factory!

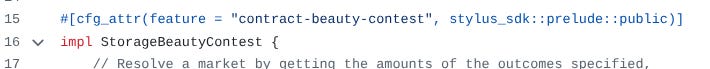

For contract_beauty_contest (and contract_infra_market, contract_lockup, and contract_trading), we check the feature flag so that the user entrypoint is only generated when that contract is the one being built:

That entrypoint is exported from the file by default. So the import from above, where we import everything from the module, picks up the entrypoint this way.
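
To show the shape of the pattern without the SDK, here is a self-contained sketch. The module bodies are stand-ins for what the entrypoint macro would generate, the NAME constants and the fallback for "no feature set" exist only so the sketch compiles and runs on its own, and the feature names would also need to be declared under [features] in Cargo.toml.

```rust
// Each contract lives in its own module, compiled only when its feature is on.
#[cfg(feature = "contract-factory-1")]
mod contract_factory_1 {
    /// Stand-in for the entrypoint the SDK macro would generate.
    pub fn user_entrypoint(_len: usize) -> usize {
        0
    }
    pub const NAME: &str = "factory-1";
}

#[cfg(feature = "contract-trading")]
mod contract_trading {
    /// Stand-in for the entrypoint the SDK macro would generate.
    pub fn user_entrypoint(_len: usize) -> usize {
        0
    }
    pub const NAME: &str = "trading";
}

// Re-export whichever entrypoint matches the feature being built.
#[cfg(feature = "contract-factory-1")]
pub use contract_factory_1::{user_entrypoint, NAME};
#[cfg(feature = "contract-trading")]
pub use contract_trading::{user_entrypoint, NAME};

// Fallback so this sketch still compiles with no feature selected (illustrative only).
#[cfg(not(any(feature = "contract-factory-1", feature = "contract-trading")))]
pub const NAME: &str = "none";

/// Which contract this build contains.
pub fn selected_contract() -> &'static str {
    NAME
}
```

Building once per feature (for example with cargo build --features contract-factory-1) then yields one binary per contract, which is why the builds have to run one after another.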

Though I have a preference, the ideal approach depends on your team’s DNA. My team are sophisticated shell script operators, so we don’t mind maintaining a Makefile that orchestrates the feature flagging, moving files around where appropriate. The problem with this approach is that we can’t parallelise the builds: all compilation needs to happen sequentially.

I’m interested in seeing what people prefer over time, and for the new version of the SDK to come out with a better approach for this!

Stylus on RISC-V (32IM) Part 2 - The SDK and environment calls

This is a continuation of part 1 of this series:

It’s been a month! Wow, time flies! Let’s continue our support for RISC-V with the Stylus SDK. To continue our journey, we need to do the following:

Get the missing features from the EVM supported in our simulator. This includes the remaining host operations.

Get the SDK fully updated to let us generate binaries with RISC-V! Fix the entrypoint and the rest of the storage code! Release a forked SDK anyone can use as a dependency.

To recap what RISC-V is, and what we’re up to: RISC-V is a “reduced instruction set” instruction set architecture (ISA) that’s developed as an open source standard. An ISA is the set of instructions that a processor understands. You, the end programmer, target it by compiling code written in a higher level language down to those instructions. So when you compile your Rust code (and it’s not to be run on Arbitrum), it spits out “native code”, which is code capable of being run with the native instruction set for your processor type. If you’re on a Mac, and you have an M4 chip, then you run the ARM instruction set, which is different from the instruction set my Intel i7 processor runs.

We’re interested in running Stylus smart contracts on the 32-bit RISC-V instruction set (the base Integer instruction set with the Multiplication extension, RV32IM) since there was some discussion last month of running the entire Ethereum Virtual Machine on RISC-V. My goal is to potentially establish Arbitrum Stylus as the main programming environment alongside Solidity and Vyper in the Ethereum space, so my ultimate goal with this exercise is to eventually simulate RISC-V on-chain inside a WASM runner.

So far, we’ve developed a testing simulator for offline development in OCaml. In this article, we’ll support missing EVM features in the simulator using environment calls (where the guest running program can indicate to the CPU that the CPU needs to step in and do something before giving back control). We’ll also patch the SDK to support RISC-V as a compilation target, and release a contract for this.

Read on!

Missing features supported in the simulator

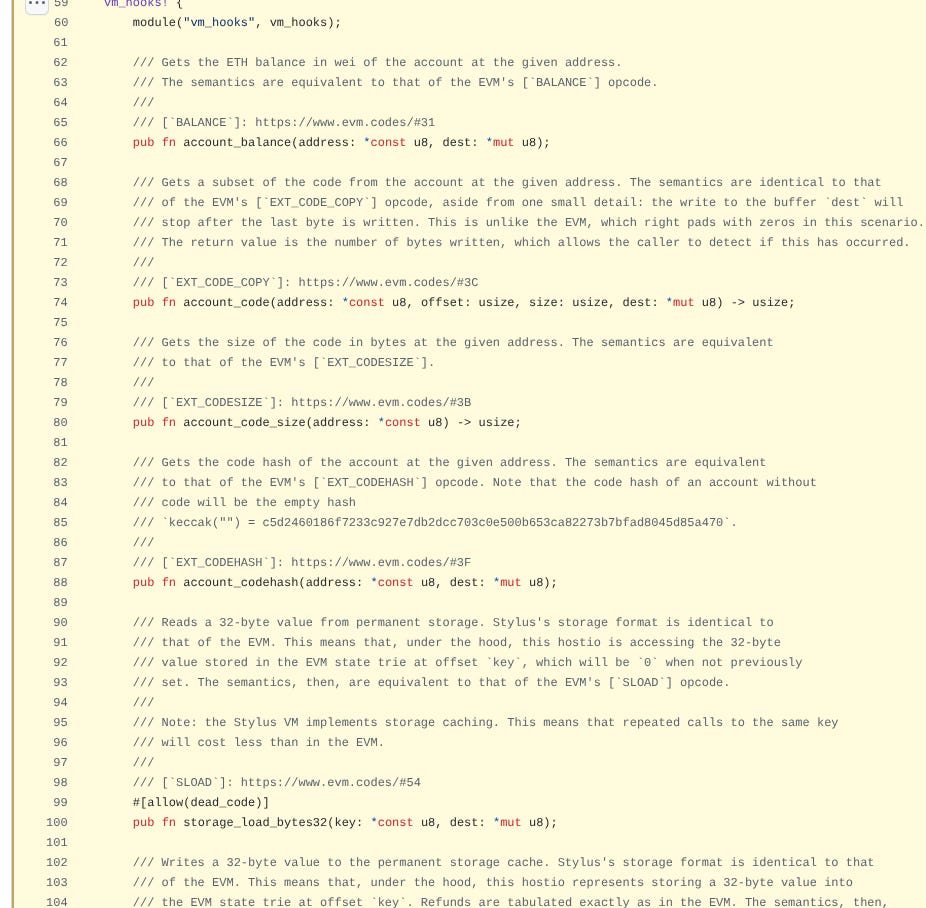

To do this, we’ll first implement the external functions that Stylus needs:

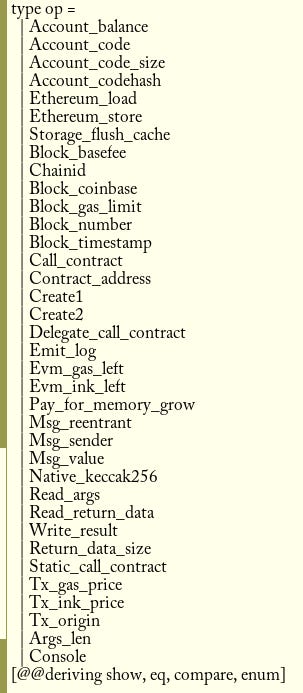

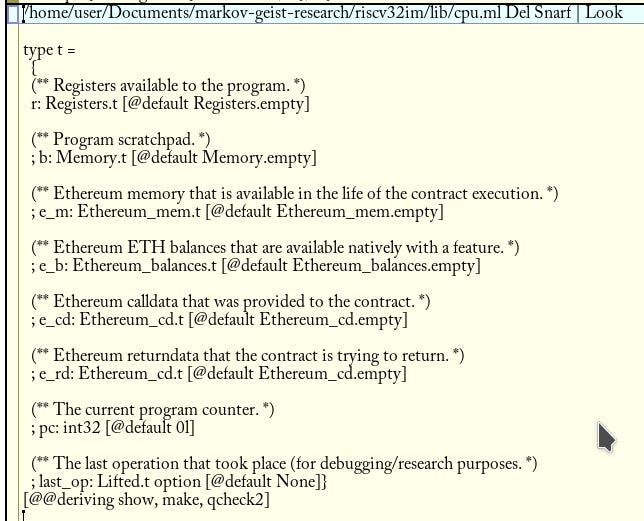

These are implemented by the host environment to support the EVM storage trie, calldata, and the classic calling operations. These are normally provided in WASM as external functions. We need to support them in our OCaml simulator by allowing a user to trigger an environment call with the A7 register set to the number of the function to use! Let’s create a new file, and define a type with a variant for each external function we want to support:

Note the “enum” preprocessor: this creates functions that convert an integer to one of the variants here, and vice versa. Note that we add a helper console function, as well as a function for reading the length of the arguments! We need the conversion so that we can match on the number and dispatch to our functions here:

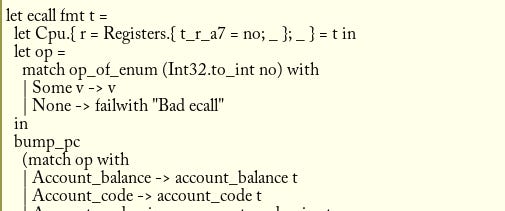
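
The simulator itself is written in OCaml, but the number-to-variant conversion and the A7 lookup can be sketched in Rust for readers following along in the SDK's language. Only the number 34 for args_len comes from this post; the other numbers below are placeholders I picked, and the names are illustrative. A7 is register x17 in the standard RISC-V ABI.

```rust
/// Register index of A7 (x17), which carries the host function number.
const A7: usize = 17;

/// Host functions the guest can request. The variant set is illustrative.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum HostCall {
    ReadArgs,
    WriteResult,
    ArgsLen,
    ConsoleLog,
}

impl HostCall {
    /// Integer to variant, like the conversion the OCaml "enum" preprocessor derives.
    fn from_number(n: u32) -> Option<Self> {
        match n {
            0 => Some(Self::ReadArgs),    // placeholder number
            1 => Some(Self::WriteResult), // placeholder number
            34 => Some(Self::ArgsLen),    // number used later in this post
            35 => Some(Self::ConsoleLog), // placeholder number
            _ => None,
        }
    }

    /// Variant back to integer.
    fn to_number(self) -> u32 {
        match self {
            Self::ReadArgs => 0,
            Self::WriteResult => 1,
            Self::ArgsLen => 34,
            Self::ConsoleLog => 35,
        }
    }
}

/// Look at A7 and decide which host function an `ecall` is asking for.
/// Unknown numbers are returned as an error so the simulator can trap.
fn decode_ecall(regs: &[u32; 32]) -> Result<HostCall, u32> {
    let number = regs[A7];
    HostCall::from_number(number).ok_or(number)
}
```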

In line with the standard calling convention with RISC-V, we store the syscall number in the register A7. To accommodate the new supported EVM features, we need to update the amount of information available to our virtual CPU. We do so by adding a new file, which has this definition:

Note the extra fields beyond the memory that we need for the program from last time! Specifically, the “Ethereum_cd.t” type in the fields e_cd, and e_rd, which we use for the calldata and the returndata for the program respectively. We’ll service some of the external functions using these fields in the type for the CPU here.

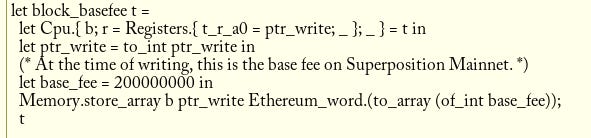

So a function supporting an environment call would look like this:

This function would create a U256 from the integer given (currently, the default setting that SPN Mainnet has), then write it to the given location in memory, equivalent to the host features that the WASM runner would implement.
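
Here is a rough Rust equivalent of that write, assuming guest memory is a flat byte buffer and the 256-bit value is laid out as a 32-byte big-endian word, as EVM words are. The function name and error strings are mine.

```rust
/// Write `value` as a 32-byte big-endian word at `ptr` in guest memory.
/// Fails instead of panicking when the guest hands us a bad pointer.
fn write_u256_from_u64(mem: &mut [u8], ptr: usize, value: u64) -> Result<(), &'static str> {
    let end = ptr.checked_add(32).ok_or("pointer overflow")?;
    let word = mem.get_mut(ptr..end).ok_or("write outside guest memory")?;
    // The upper 24 bytes of the word are zero; the value sits in the low 8 bytes.
    word[..24].fill(0);
    word[24..].copy_from_slice(&value.to_be_bytes());
    Ok(())
}
```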

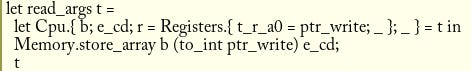

Reading the arguments is similar. We simply read from the custom type that contains the calldata:

    (* Ethereum_cd.ml *)
    type t = int32 Array.t

Very simple! We need a function that lets the guest RISC-V program set the returndata:

Using the A0 and A1 registers is how we find the read pointer, and the length. This pattern will repeat throughout our implementation, in line with the standard calling convention with RISC-V.
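
The A0/A1 pattern can be sketched as follows. This is a Rust stand-in for the OCaml CPU record, not the simulator's code: the e_cd and e_rd field names follow the post, but I use plain byte vectors rather than the int32 array, and the method names and error strings are illustrative. A0 and A1 are registers x10 and x11 in the standard RISC-V ABI.

```rust
const A0: usize = 10; // first argument / return value
const A1: usize = 11; // second argument

/// Simplified CPU state: registers, guest memory, calldata, and returndata.
struct Cpu {
    regs: [u32; 32],
    mem: Vec<u8>,
    e_cd: Vec<u8>,
    e_rd: Vec<u8>,
}

impl Cpu {
    fn new(mem_size: usize, calldata: &[u8]) -> Self {
        Cpu { regs: [0; 32], mem: vec![0; mem_size], e_cd: calldata.to_vec(), e_rd: Vec::new() }
    }

    /// args_len: return the calldata length to the guest in A0.
    fn args_len(&mut self) {
        self.regs[A0] = self.e_cd.len() as u32;
    }

    /// read_args(dest): copy the calldata into guest memory at the pointer in A0.
    fn read_args(&mut self) -> Result<(), &'static str> {
        let dest = self.regs[A0] as usize;
        let end = dest.checked_add(self.e_cd.len()).ok_or("pointer overflow")?;
        let window = self.mem.get_mut(dest..end).ok_or("read_args outside guest memory")?;
        window.copy_from_slice(&self.e_cd);
        Ok(())
    }

    /// write_result(data, len): A0 holds the read pointer and A1 the length.
    fn write_result(&mut self) -> Result<(), &'static str> {
        let ptr = self.regs[A0] as usize;
        let len = self.regs[A1] as usize;
        let end = ptr.checked_add(len).ok_or("pointer overflow")?;
        self.e_rd = self.mem.get(ptr..end).ok_or("write_result outside guest memory")?.to_vec();
        Ok(())
    }
}
```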

You can read the repo to see the extent of the functions that we implemented here.

Tweaking the SDK

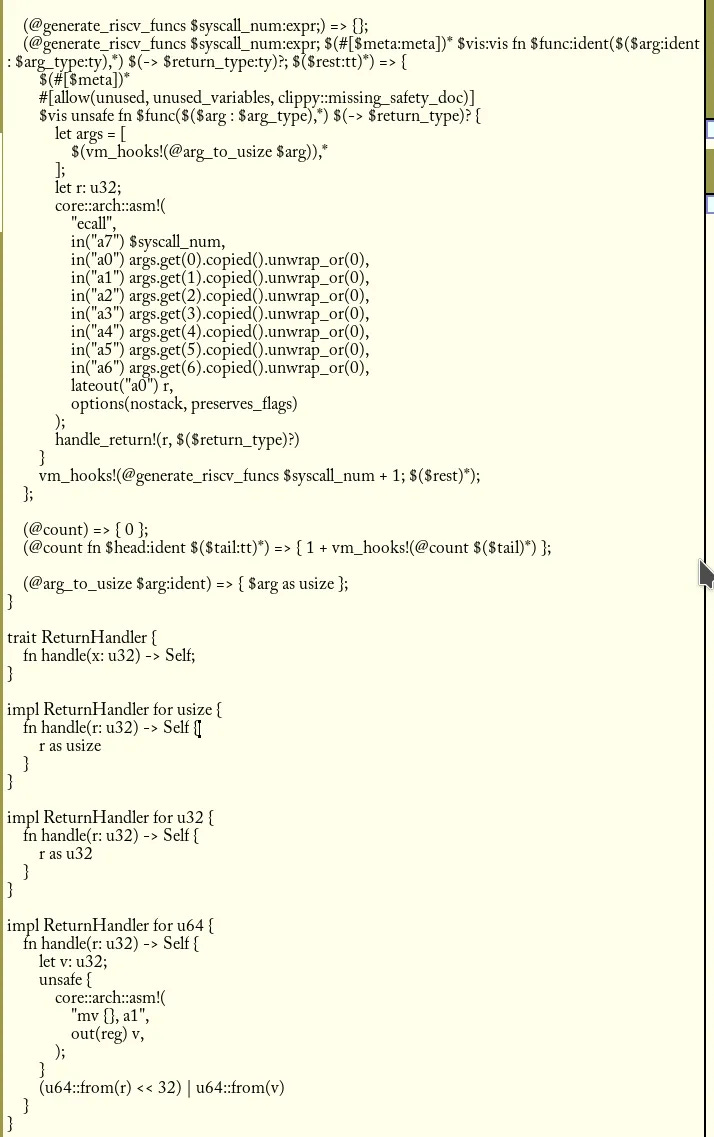

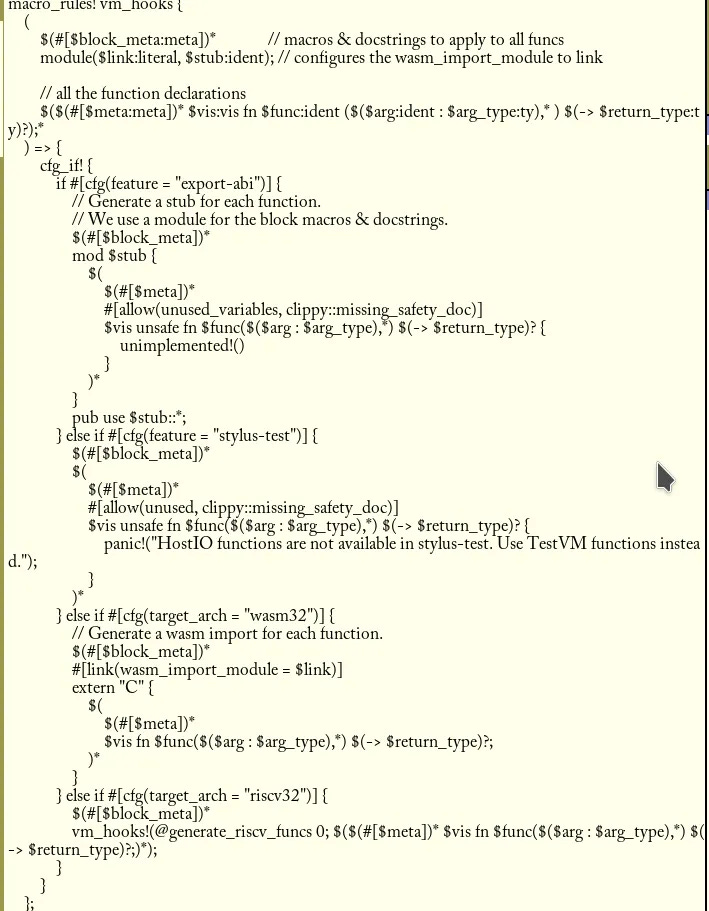

Now that we’ve got a reasonable implementation of some of the foreign functions that the contract needs, we can start to support RISC-V in the SDK. We need to extend the vm_hooks macro, to add branching for the RISC-V target that copies the arguments that the functions would provide to the external function as arguments to the registers, in line with the calling convention.

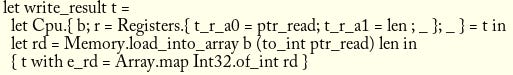

This means code like the following:

This code makes a slice of the arguments given to each function (casting them from their u32 type to usize), and sets the registers with it. It invokes the associated host function using a number that increments with each function declared. It generates functions that read the registers and reconstruct them based on the arguments.

This new macro spits out code like this for the RISC-V target:

For the above contract address, this is simply a pointer to the offset for the 20 byte address to be written. A better implementation of the macro would set the single field, as well as the syscall number, instead of setting every argument for the standard calling convention. A problem this macro has is that an argument with a u64 is simply cast to the u32 type, instead of leveraging an extra register implicitly.
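
For a feel of how such a macro can work, here is a stripped-down sketch. It is not the SDK's vm_hooks macro: host_fns, ecall, the two declared functions, and their numbering are all hypothetical. On RISC-V it loads A0 to A5 and A7 and issues an ecall; on any other target a stand-in simply echoes the syscall number so the sketch can run on a development machine. Every argument is cast to usize, which reproduces the u64 truncation problem described above.

```rust
/// Environment call: syscall number in A7, arguments in A0..A5, result in A0.
#[cfg(target_arch = "riscv32")]
unsafe fn ecall(number: usize, args: [usize; 6]) -> usize {
    let ret: usize;
    unsafe {
        core::arch::asm!(
            "ecall",
            in("a7") number,
            inlateout("a0") args[0] => ret,
            in("a1") args[1],
            in("a2") args[2],
            in("a3") args[3],
            in("a4") args[4],
            in("a5") args[5],
            options(nostack),
        );
    }
    ret
}

/// Off-target stand-in so the sketch runs anywhere: echoes the syscall number.
#[cfg(not(target_arch = "riscv32"))]
unsafe fn ecall(number: usize, _args: [usize; 6]) -> usize {
    number
}

/// Generate one wrapper per declared host function, numbering them in order.
macro_rules! host_fns {
    ($n:expr;) => {};
    ($n:expr; fn $name:ident($($arg:ident: $ty:ty),*); $($rest:tt)*) => {
        pub unsafe fn $name($($arg: $ty),*) -> usize {
            // Cast every argument to a register-sized value (u64 would truncate).
            let args: &[usize] = &[$($arg as usize),*];
            let mut regs = [0usize; 6];
            regs[..args.len()].copy_from_slice(args);
            unsafe { ecall($n, regs) }
        }
        host_fns!($n + 1; $($rest)*);
    };
}

host_fns! {
    0;
    fn read_args(dest: *mut u8);
    fn write_result(data: *const u8, len: usize);
}
```

Here read_args gets number 0 and write_result gets number 1. Always loading six argument registers is wasteful, which is the same shortcoming noted above for the real macro.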

We needed to also make some changes to the Cargo.toml file to accommodate the bytes package, and some dependency on arc. I also needed to upstream a patch to dyn-clone to accommodate target_has_atomic since we don’t have access to anything involving sync (RISC-V 32IM is a tier 3 target with no parallelism).

Our first (somewhat legit) contract

Having adjusted the SDK, and added features to the simulator, we can write our first contract! Inside the risc-hello-world contract, we can create the following:

    // src/main.rs
    use stylus_sdk::{prelude::*, storage::StorageString};

    #[entrypoint]
    #[storage]
    struct Storage {
        pub message: StorageString,
    }

    #[public]
    impl Storage {
        pub fn hello(&mut self) -> String {
            self.message.set_str("Hello!");
            self.message.get_string()
        }
    }

    // HEAP and ALLOCATOR back the simple allocator defined in the previous post.
    #[export_name = "_start"]
    pub extern "C" fn _start() -> ! {
        unsafe {
            let heap_start = HEAP.as_mut_ptr() as usize;
            let heap_len = HEAP.len();
            ALLOCATOR.init(heap_start, heap_len);
        }
        // Environment call 34 (args_len): the calldata length comes back in A0.
        let len: usize;
        unsafe {
            core::arch::asm!(
                "ecall",
                in("a7") 34,
                lateout("a0") len,
                options(nostack, preserves_flags)
            );
        }
        let status = user_entrypoint(len);
        // Leave the status in A0 before ebreak; the simulator checks A0 when exiting.
        unsafe {
            core::arch::asm!("mv a0, {}", in(reg) status);
        }
        unsafe {
            core::arch::asm!("ebreak");
        }
        loop {}
    }

    #[panic_handler]
    fn panic(_info: &core::panic::PanicInfo) -> ! {
        // On panic, report a status of 2 in A0.
        unsafe {
            core::arch::asm!("mv a0, {}", in(reg) 2);
        }
        unsafe {
            core::arch::asm!("ebreak");
        }
        loop {}
    }

We create a simple allocator (we defined this in the previous post), and set it up in our entrypoint. We call a custom function at number 34 (args_len) to get the length of the calldata argument, and then we read the contents of A0 after the call into the variable len.

Knowing the length lets us invoke the user_entrypoint function that the code normally generates! This will let us pick the entrypoint, in this case the function “hello()” which returns a string! Our program sets “Hello!” to the storage, and then reads it off, returning it.

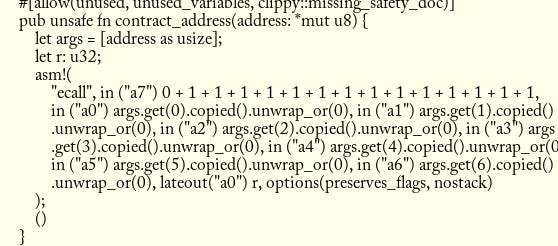

The user_entrypoint function reads the contents of the arguments by calling read_args, which our RISC-V simulator services by providing the calldata in memory. It then returns the status, which we set to the register A0. Among our changes here, we also changed the simulator to check the value stored in the A0 register when exiting:

Any calls to the write_result(data: *const u8, len: usize) function invoked by the user_entrypoint code will set the return value for the contract. The function that sets the return data will set the e_rd field in the record that we have in the Cpu.t type. Our program then prints and returns it for us!

Running the simulator

We can run this code now! The RISC-V runner also includes an argument that we can provide that sets the sender. We can run the copy of the test code inside the simulator repo. This contract will slightly differ from the above, so that we can supply the string to set in storage as an argument:

    #[entrypoint]
    #[storage]
    struct Storage {
        pub message: StorageString,
    }

    #[public]
    impl Storage {
        pub fn hello(&mut self, x: String) -> String {
            self.message.set_str(x);
            self.message.get_string()
        }
    }

First, we build:

    dune build bin/main.exe

Then we run:

    ./_build/default/bin/main.exe test/risc-hello-world $(cast calldata 'hello(string)' Hello)

Which returns:

    0x0000000000000000000000000000000000000000000000000000000000000020000000000000000000000000000000000000000000000000000000000000000548656c6c6f000000000000000000000000000000000000000000000000000000

The operation $(cast calldata 'hello(string)' Hello) substitutes into the argument list the calldata for the signature “hello(string)” with the argument we want (“Hello”), which is 0xa777d0dc0000000000000000000000000000000000000000000000000000000000000020000000000000000000000000000000000000000000000000000000000000000548656c6c6f000000000000000000000000000000000000000000000000000000! This is provided to the program as calldata by setting the e_cd field in the simulator!
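
To read the returned bytes, it helps to split them into 32-byte words: an offset (0x20), a length (5), and the UTF-8 bytes of the string padded to a full word. The sketch below decodes that layout with only the standard library. The helper names are mine, and it handles just this single-string case rather than the full ABI.

```rust
/// Parse a hex string (with or without a 0x prefix) into bytes.
fn hex_to_bytes(s: &str) -> Option<Vec<u8>> {
    let s = s.strip_prefix("0x").unwrap_or(s);
    if s.len() % 2 != 0 {
        return None;
    }
    (0..s.len())
        .step_by(2)
        .map(|i| u8::from_str_radix(s.get(i..i + 2)?, 16).ok())
        .collect()
}

/// Interpret a 32-byte big-endian word as a usize, rejecting values that don't fit.
fn word_as_usize(word: &[u8]) -> Option<usize> {
    if word.len() != 32 || word[..24].iter().any(|b| *b != 0) {
        return None;
    }
    let mut low = [0u8; 8];
    low.copy_from_slice(&word[24..]);
    usize::try_from(u64::from_be_bytes(low)).ok()
}

/// Decode returndata holding a single ABI-encoded string.
fn decode_abi_string(data: &[u8]) -> Option<String> {
    // Word 0: offset of the string's length word.
    let offset = word_as_usize(data.get(..32)?)?;
    let len_end = offset.checked_add(32)?;
    // Word at `offset`: byte length of the string.
    let len = word_as_usize(data.get(offset..len_end)?)?;
    // The bytes follow immediately, padded with zeros to a 32-byte boundary.
    let bytes = data.get(len_end..len_end.checked_add(len)?)?;
    String::from_utf8(bytes.to_vec()).ok()
}

/// Rebuild the three words of the output above: offset 0x20, length 5, "Hello" padded.
fn example_output() -> String {
    format!("0x{:064x}{:064x}{}{}", 0x20, 5, "48656c6c6f", "0".repeat(54))
}
```

Passing the bytes of example_output() to decode_abi_string gives back the string Hello.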

What’s next?

We need to fix our simulator to not use libbinutils to read the ELF section headers, so that we can support release generated (stripped) builds. This is a problem for my system since I’ve had some issues linking against my preferred library (libelf) for this, and I don’t want to roll support myself natively. For our research simulator, it would be nice to support multiple contracts, and transactions/blocks/mining. It would be nice to support the compressed and vector extensions to RISC-V as well, so we can start to support our silly little simulator as a nice research tool for the respective ISAs. We could even support a coprocessor for large words (or something)!

We also need to move our allocator to a crate, identify any problems with it (the heap being small?), and streamline the development experience of working with RISC-V this way. The ultimate goal here though is to simulate RISC-V 32IM on-chain in the WASM on-chain environment to show that the performance penalty is acceptable, and to build awareness and establish Stylus as the main programming environment in the alt EVM space!

Stylus Saturdays is brought to you by… the Arbitrum DAO! With a grant from the Fund the Stylus Sprint program. You can learn more about Arbitrum grants here: https://arbitrum.foundation/grants

Follow me on X: @baygeeth and on Farcaster!

Side note: I develop Superposition, a defi-first chain that pays you to use it. You can check our ecosystem of dapps out at https://superposition.so!