Building a chain-agnostic dApp with Stylus using state channels, and introducing Valley Game! Part 1: the foundation

22 July 2025. Introducing the "Planning Poker but for valuations" house party game.

Hello! Bayge here. Inspired by Robinhood’s recent launch on Arbitrum, in this article we’re going to begin building a complete dApp from scratch, including a backend, contract, and frontend, using a state channel technique with Stylus for a fully chain-agnostic experience without gas fees!

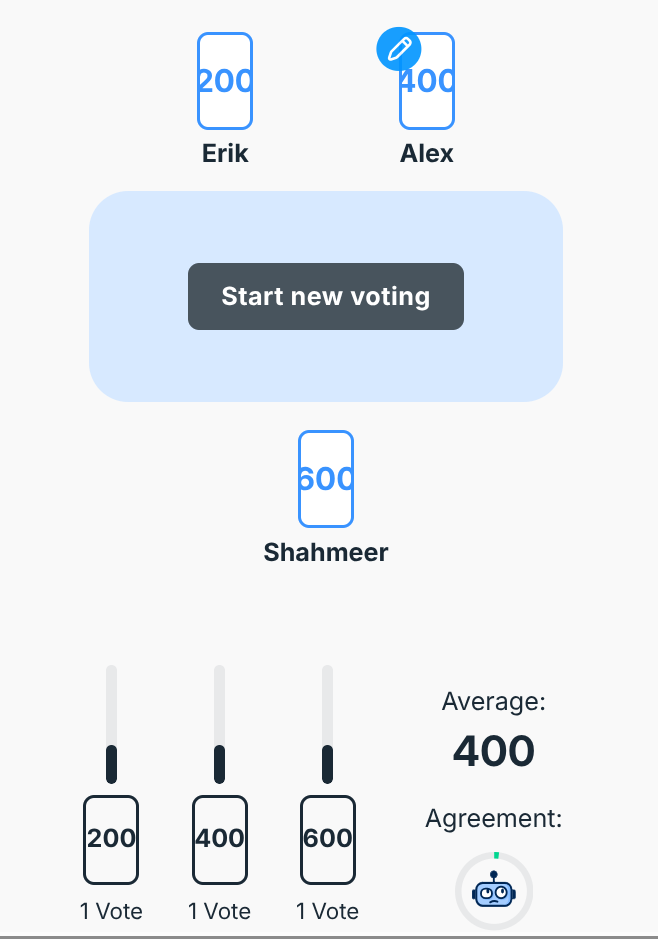

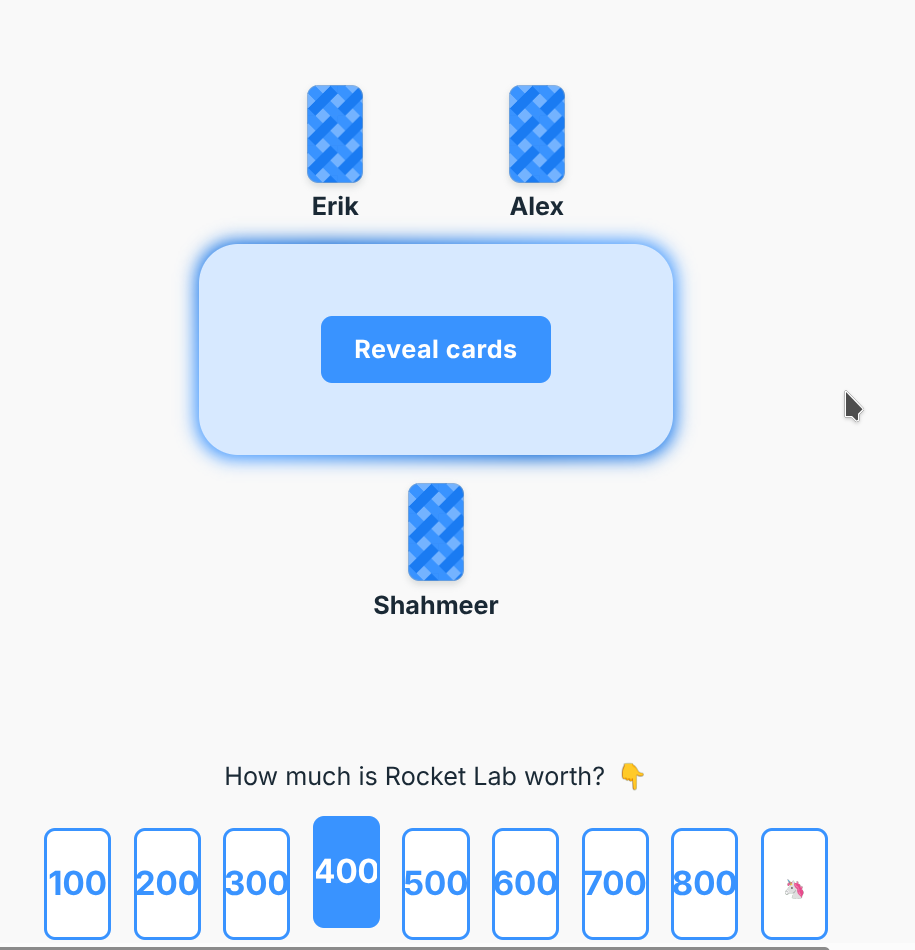

This will be a new type of game for VCs and partygoers, where players work to discover the true value of a fictional or real company. Like planning poker, but for valuations!

Planning poker is a project complexity estimation tool where developers estimate the complexity of a task privately, then reveal their estimates at the same moment. The developers repeat this until they reach consensus on the time it takes to complete the task. This makes planning poker a very useful tool for discovering misconceptions about project timelines.

In this part 1 article, we’ll be:

Writing state machine diagrams 📊🔄 for the user journey and technical story.

Creating a recursive game-playing type 🔁🎯 for the contract. Since this is a state channel/validium/rollup (depending on your preferred nomenclature), we'll be compressing a recursive structure for submission on-chain to trigger side effects. We will use a UTXO style of optimistically consuming LayerZero 🌐⚡ transactions on the server side, and deposits with a debt derivative 💰📈 for a frictionless user experience.

Building a GraphQL backend and database 🗄️🔧. We'll be building an AWS Lambda function ☁️⚡ that works with a Postgres database 🐘 to store signatures and game state.

Defining the contract entrypoint 📝✨. We'll be defining a high level interface with the types, then starting to build it and discussing the implementation! 🚀💻

After these steps, our next post will create the frontend and complete the contract! For now, we’ll focus entirely on the backend and the broad strokes. Read on!

I’m excited to announce Valley Game, a game and tool like planning poker, but for valuations!

The game is either played in a fictional setting, with a fake company, or a real world company in a limited setting.

Users play rounds of Valley Game, and are awarded points based on how close they are to each other each round!

In the images above, the players would play 4 more rounds of this. Then a fictional token would be divided up according to the points distribution!

Points are also awarded retrospectively based on how close a user was at the beginning of the game to the final outcome.

It is played entirely in a rollup using a neat state channel compression technique we’ll discuss and develop in this post (meaning zero gas fees per transaction except when on-ramping and off-ramping funds, and instant confirmation). It can optionally be played with real money using a token I’ll pick from the FC community, and it is playable on Farcaster using Ben Greenberg’s Farcaster template, which we’ll develop with a live video in a later post.

Users can play from any chain, including Arbitrum, Base, and Superposition, with no degradation of experience, by leveraging “satellite” contracts on the chains supported, and the state channel technique developed in this post. We’ll also be discussing support for web2 users on-ramping using Stripe.

Since no actual numbers are ever specified in this game, it’s entirely subjective based on the audience, making this a great game for repeated play! The game will never tell you what the valuation should be, the audience imagines it entirely by itself. It’s also illustrative of a unique approach to oracle design.

While the game itself is intended to be played with fictional companies, a mode is supported where real companies are used, and this could perhaps be used in an environment where . The playing team could make a prediction for a year from now, and state their estimation natively using Farcaster!

You can find the repo here:

The user story

This game is played as a state channel that’s opened after a deposit to a contract on Superposition Mainnet. Depositing the asset into the contract triggers the creation of a spendable UTXO input. Our Stylus contract validates compressed, signed calldata and releases funds when a signature and transaction are provided to do so, all stemming from the user consuming the UTXO input. The application runs optimistically across multiple chains, validating on the server side and deterministically creating the user’s balance UTXO coin when a transaction enters the LayerZero transaction pool.

Quick interlude: what’s a UTXO? A UTXO (unspent transaction output) input is an asset that can be “spent” to produce an “output”. Funnily enough, we introduced coins in the last post when we discussed Sui: https://stylus-saturdays.com/i/167568457/objects-and-parallelism ← check this out for more!
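To make the UTXO idea concrete, here is a minimal sketch in Python (the language of the pseudocode later in this post). `Utxo`, `UtxoSet`, `create`, and `spend` are hypothetical names for illustration, not code from the Valley Game repo. An input can be consumed exactly once, and spending it produces new outputs that must add up to what was consumed.

```python
import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class Utxo:
    id: int
    owner: str
    amount: int


class UtxoSet:
    """Hypothetical in-memory UTXO set: every output can be spent exactly once."""

    def __init__(self):
        self._ids = itertools.count()
        self.unspent = {}  # id -> Utxo

    def create(self, owner, amount):
        # Mint a fresh output, e.g. when a deposit lands on the contract.
        utxo = Utxo(next(self._ids), owner, amount)
        self.unspent[utxo.id] = utxo
        return utxo

    def spend(self, input_id, outputs):
        """Consume one input and produce new outputs from (owner, amount) pairs."""
        spent = self.unspent.get(input_id)
        if spent is None:
            raise ValueError("input already spent or unknown")
        if sum(amount for _, amount in outputs) != spent.amount:
            raise ValueError("outputs must add up to the consumed input")
        del self.unspent[input_id]
        return [self.create(owner, amount) for owner, amount in outputs]
```

Trying to spend the same input twice raises, which is the property the server relies on when it consumes a deposit.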

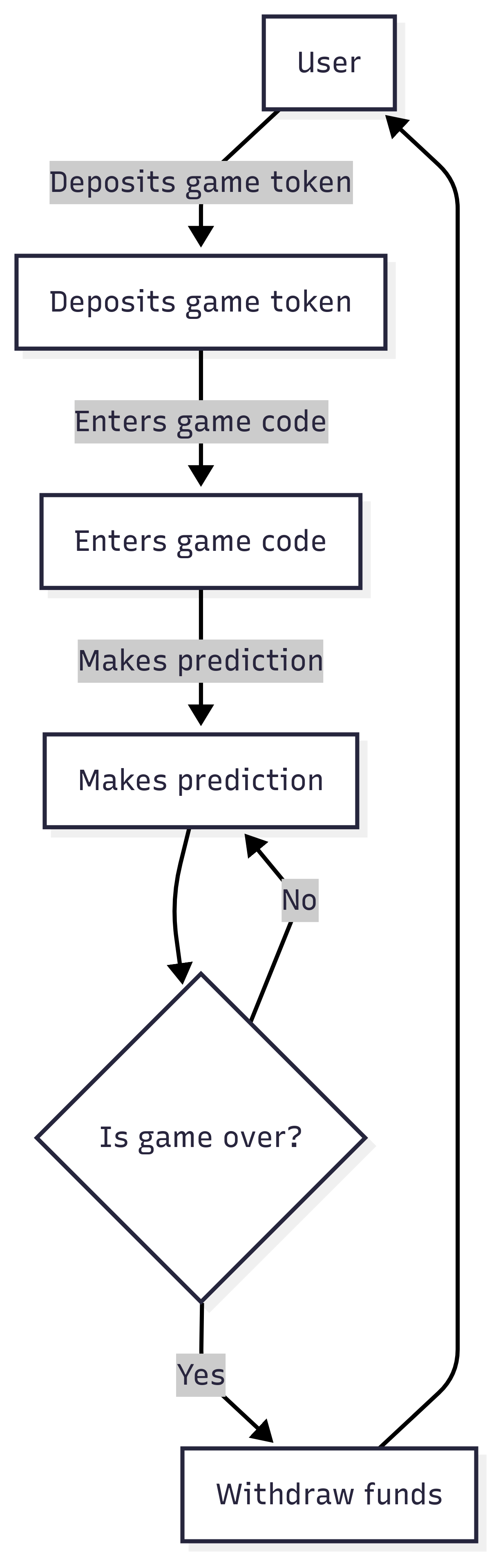

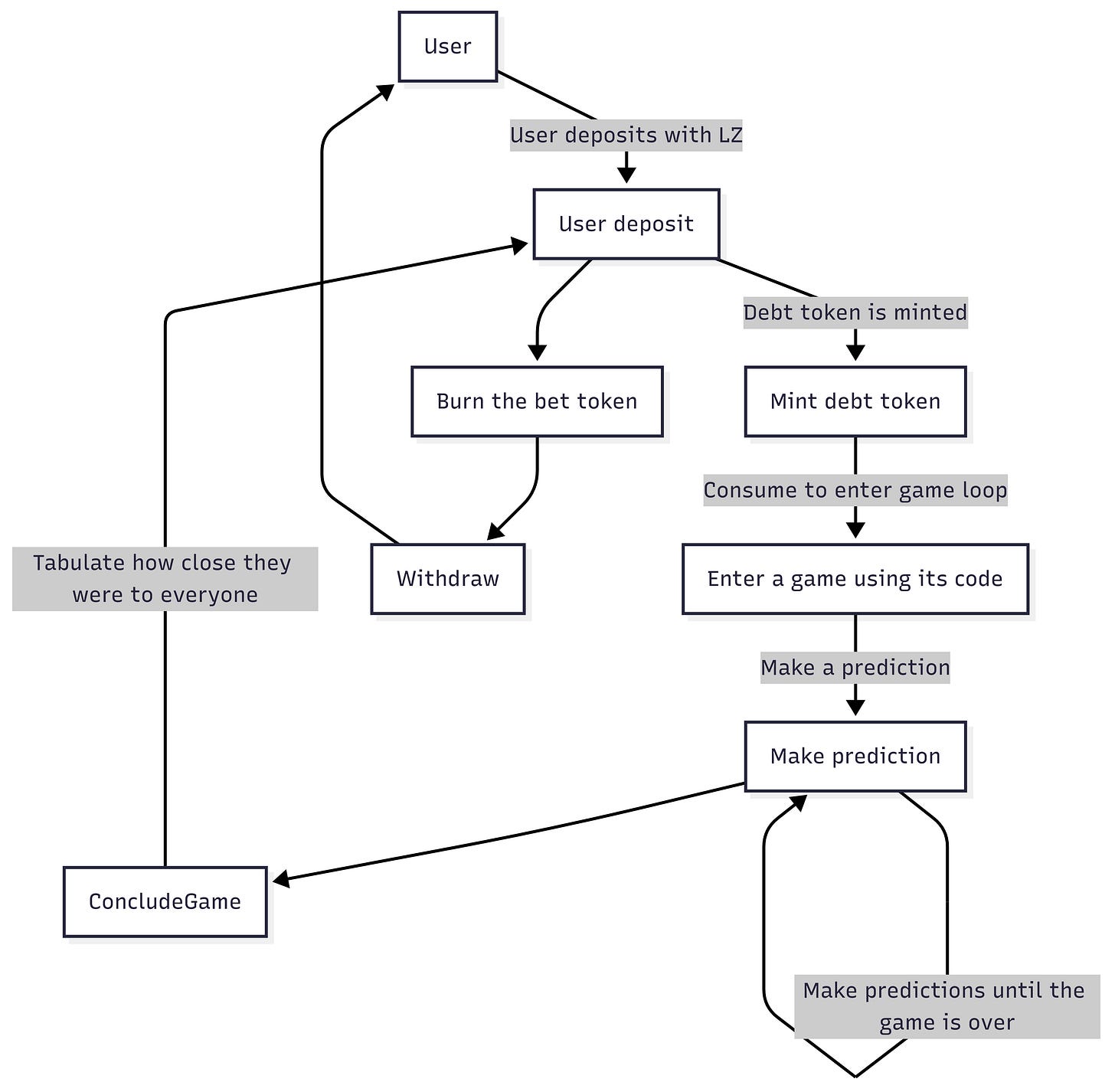

To begin with, we’re going to implement a user-centric journey without discussing the randomness and variation that would need to be injected into the game for fictional play. We’ll focus only on making a game that is played over multiple rounds, with points allocated for how close users are. It should look like this:

So basically, a user opens the game and makes a prediction alongside everyone else, then everyone’s predictions are aggregated. The user goes back and forth inside the “Makes prediction” stage, depending on some state, then withdraws their funds from the contract.
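The journey above is a small state machine, and one way to pin it down is a transition table. This is a sketch under assumptions: the `Stage` names are my reading of the diagram, not identifiers from the repo. The loop between making a prediction and aggregating models the back-and-forth rounds.

```python
from enum import Enum, auto


class Stage(Enum):
    OPENS_GAME = auto()
    MAKES_PREDICTION = auto()
    AGGREGATES = auto()
    WITHDRAWS = auto()


# Allowed transitions: after aggregation the user either plays another
# round or leaves by withdrawing.
TRANSITIONS = {
    Stage.OPENS_GAME: {Stage.MAKES_PREDICTION},
    Stage.MAKES_PREDICTION: {Stage.AGGREGATES},
    Stage.AGGREGATES: {Stage.MAKES_PREDICTION, Stage.WITHDRAWS},
    Stage.WITHDRAWS: set(),
}


def walk(path):
    """Validate a sequence of stages against TRANSITIONS; return the final stage."""
    current = path[0]
    for nxt in path[1:]:
        if nxt not in TRANSITIONS[current]:
            raise ValueError(f"illegal transition {current.name} -> {nxt.name}")
        current = nxt
    return current
```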

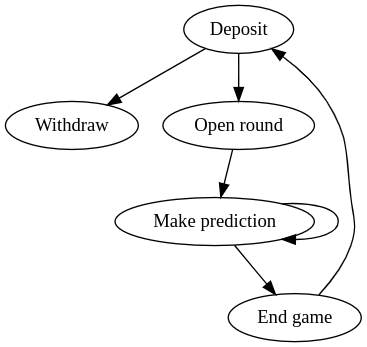

With a UTXO-style pattern of allocating a balance object and consuming it later (similar to what’s going to go live in Longtail Pro Passport in the near future), we can mock a user state machine like this:

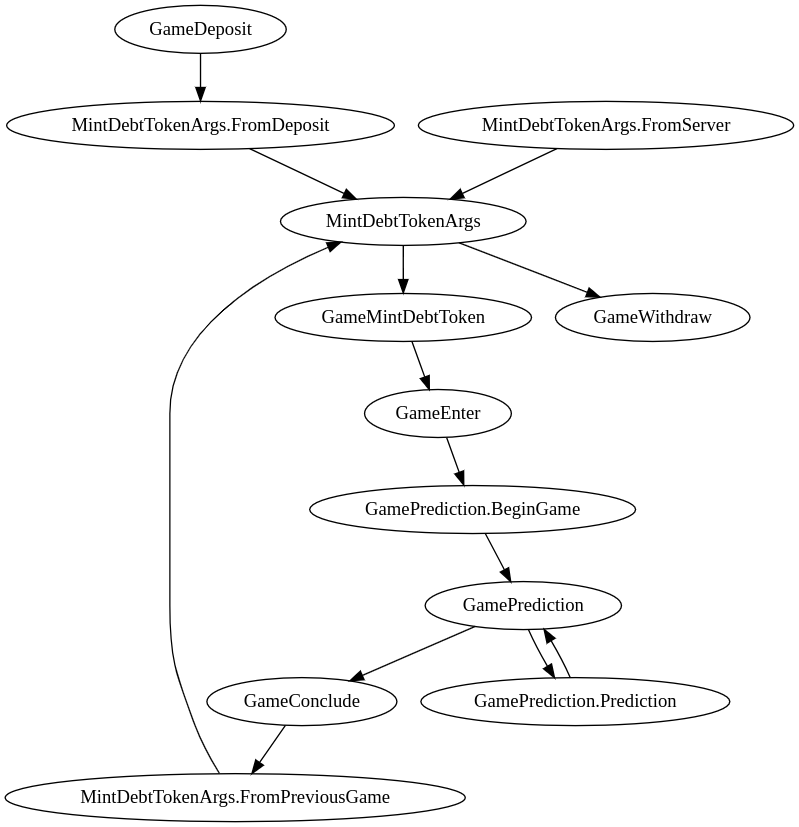

In terms of a technical user story, this would resemble the following:

So, we allow users to deposit their asset, we mint a debt derivative that can be converted to the underlying asset at any time, and we let them play the game back and forth for a while before returning to where we started. Once the user is back at square one, we consume their debt token to optionally send them back their asset, or we allow them to re-enter the game. It’s important that we mint a debt token: it’s a tool that allows you to underwrite your token with a custodial asset to support web2 users, without minting friction. This is consistent with the social goals of this project (supporting web2 users with no crypto wallets, using in-browser signatures). It does expose your project to bank runs, so it’s important that you shore up your on-chain position to be consistent with what’s in circulation (inclusive of your web2 users), and that you keep your signing process for creating assets very safe.
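The bank-run caveat comes down to one invariant: on-chain reserves should cover every debt token in circulation, including the ones minted for web2 users who paid off-chain. Here is a minimal sketch of that bookkeeping. `DebtLedger` and its methods are hypothetical, and the model ignores fees and pricing.

```python
class DebtLedger:
    """Hypothetical ledger for a debt token backed by on-chain reserves."""

    def __init__(self):
        self.onchain_reserves = 0  # underlying asset actually held by the contract
        self.balances = {}         # holder -> debt token balance

    @property
    def circulating(self):
        return sum(self.balances.values())

    def mint_from_deposit(self, holder, amount):
        # On-chain deposit: reserves and supply grow together.
        self.onchain_reserves += amount
        self.balances[holder] = self.balances.get(holder, 0) + amount

    def mint_custodial(self, holder, amount):
        # Web2 user paid off-chain: supply grows but reserves don't, so the
        # operator must top up on-chain reserves separately.
        self.balances[holder] = self.balances.get(holder, 0) + amount

    def top_up(self, amount):
        self.onchain_reserves += amount

    def redeem(self, holder, amount):
        # Burn debt tokens and release the underlying asset.
        if self.balances.get(holder, 0) < amount:
            raise ValueError("insufficient debt token balance")
        if self.onchain_reserves < amount:
            raise RuntimeError("reserves exhausted: this is where a bank run bites")
        self.balances[holder] -= amount
        self.onchain_reserves -= amount
        return amount

    def fully_backed(self):
        return self.onchain_reserves >= self.circulating
```

A custodial mint leaves the ledger under-backed until the operator tops up the reserves, which is the position the paragraph above says to shore up.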

For a UTXO-style system with compressed messages, we need to support a join operation, at least for deposits (where a user has completed multiple games and wants to keep playing without leaving). We can skip implementing a split, assuming that users deposit deliberately and don’t plan on reducing their allocation after making the deposit.
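With no split, the only consolidation primitive needed is a join: several unspent inputs owned by one player become a single output. A minimal sketch, where `join` and the `(owner, amount)` tuple shape are hypothetical:

```python
def join(inputs, owner):
    """
    Merge several unspent (owner, amount) inputs belonging to the same player
    into one output, e.g. winnings from multiple finished games.
    """
    if not inputs:
        raise ValueError("nothing to join")
    if any(o != owner for o, _ in inputs):
        raise ValueError("can only join inputs owned by the same player")
    return (owner, sum(amount for _, amount in inputs))
```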

Application pseudocode

Let’s implement some pseudocode in Python. We’ll make our game state resemble the following:

```python
from collections import deque


class Game:
    def __init__(self):
        # The current round the game is on.
        self.round = 0

        # Simple knowledge of who has the right to participate in this game.
        self.players = {}

        # The predictions made by users at each step of the game for five
        # rounds. The key for each round is the player's unique id. Stored
        # privately from each player's perspective.
        self.player_predictions = [{} for _ in range(5)]

        # The amount that every user invested to play the game.
        self.fixed_buyin = 5

        # A fixed-size circular buffer for messages, maybe 200 items long.
        self.messages = deque(maxlen=200)

        # Points awarded for each bip based on how close the user is to the
        # mean (1 bip = 0.01%).
        self.bip_points = 100
```

Like a lot of games, there’s a lack of incentive for users to invest large amounts unless others do it first. For this game, we’ll implement a fixed buy-in from every participant, and scale the number of rounds according to the amount at stake. This lets us gauge the players’ level of sophistication through observation, enriching the game structure itself. For our game here, we’ll require a buy-in of $5 of our concept token.

We’ll mock this game as an evolutionary algorithm later. In an evolutionary algorithm, a population of programs runs inside a shared space, and each program features a fixed buffer of DNA. The DNA are functions that operate on a bit of state internal to the program, which in this case could include information stored in a circular buffer and whether other players have made predictions. The DNA won’t have any information extrinsic to the generated code itself except a shared buffer. Each program’s performance is quantified, and at the end of the game, the top performers among the randomly generated programs are combined randomly to create new programs, until the top programs emerge naturally from randomness.
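The evolutionary loop described above can be sketched in a few lines. This is a toy under stated assumptions. Each program's DNA is just a byte vector rather than a list of functions, and `fitness` is any scoring callable you pass in, standing in for the game's points. The loop keeps the top performers unchanged and breeds the rest of each generation from random pairs of them, using one-point crossover plus an occasional mutation.

```python
import random


def evolve(fitness, dna_len=8, pop_size=20, generations=50, top_k=5, seed=0):
    """
    Toy evolutionary loop over byte-vector DNA. Requires dna_len >= 2 and
    top_k >= 2. Returns the best DNA found.
    """
    rng = random.Random(seed)
    population = [[rng.randrange(256) for _ in range(dna_len)] for _ in range(pop_size)]
    for _ in range(generations):
        # Score everyone and keep the top performers as-is (elitism).
        parents = sorted(population, key=fitness, reverse=True)[:top_k]
        children = []
        while len(children) < pop_size - top_k:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, dna_len)  # one-point crossover
            child = a[:cut] + b[cut:]
            if rng.random() < 0.2:           # occasional mutation
                child[rng.randrange(dna_len)] = rng.randrange(256)
            children.append(child)
        population = parents + children
    return max(population, key=fitness)


# Example: evolve DNA whose bytes sum as close to 1000 as possible.
best = evolve(lambda dna: -abs(sum(dna) - 1000))
```

Because the top performers carry over unchanged, the best score can never get worse from one generation to the next.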

For our game, the state will support a broadcast layer of messages that can be sent amongst the players. In the fictional game, this could be the fake evidence list about the company (e.g., their competitor was acquired by a bigger company, or their founder was embroiled in a scam, generated at random). In the game we’re designing, where randomness injection is out of scope, it could be used by the programs to message each other.
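The broadcast layer maps naturally onto a fixed-size ring buffer, where old messages fall off as new ones arrive. Here is a minimal sketch using `collections.deque` with `maxlen`. The `Broadcast` class and its method names are hypothetical.

```python
from collections import deque


class Broadcast:
    """Hypothetical shared message layer: a ring buffer of (sender, topic, message)."""

    def __init__(self, capacity=200):
        # With maxlen set, appending to a full deque discards the oldest entry.
        self.messages = deque(maxlen=capacity)

    def send(self, sender, topic, message):
        self.messages.append((sender, topic, message))

    def by_topic(self, topic):
        return [m for m in self.messages if m[1] == topic]
```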

This will enable our generated algorithms to send tagged Lisp-style lists like the following amongst themselves, and we can reuse this structure for information updates in our game:

```
(<topic> <message>)
```

Then we support list accessors like this:

```python
def unpack_last_msg(field, depth): ...
```

This lets our generated programs later unpack and understand messages that were sent inside the game, using them as a scratch pad of sorts for memory. It keeps our state pretty small: the programs interrogate each other for information, and only learn by encoding their performance in their program code. However! We won’t be including this on-chain, since it isn’t relevant to the contract’s final rollup of the decisions made during the course of the game.
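The post doesn’t define what `depth` means, so here is one hypothetical reading as a sketch: `depth` counts how many matching messages back to look, with 0 being the latest. The sketch takes the message log as an explicit argument and adds a tiny parser (`parse_msg`, also hypothetical) for flat `(<topic> <message>)` strings.

```python
def parse_msg(text):
    """Parse a flat Lisp-style "(<topic> <message>)" string into (topic, message)."""
    text = text.strip()
    if not (text.startswith("(") and text.endswith(")")):
        raise ValueError("expected a parenthesised list")
    topic, message = text[1:-1].split(maxsplit=1)
    return topic, message


def unpack_last_msg(messages, field, depth=0):
    """
    Return the body of the depth-th most recent message tagged `field`
    (depth=0 is the latest), or None if there aren't that many.
    `messages` is a list of (topic, body) pairs, oldest first.
    """
    matches = [body for topic, body in reversed(messages) if topic == field]
    return matches[depth] if 0 <= depth < len(matches) else None


log = [parse_msg("(valuation 120)"), parse_msg("(chat hello there)"), parse_msg("(valuation 140)")]
latest = unpack_last_msg(log, "valuation")       # "140"
previous = unpack_last_msg(log, "valuation", 1)  # "120"
```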

The gameplay loop looks like the following. Each round, we’ll award up to 100 points (`bip_points`), minus how far the user’s prediction is from the round’s average:

```python
class GameOver(Exception):
    """Raised when a prediction is made after the final round."""


class GameStarted(Exception):
    """Raised when someone tries to buy in after the first round."""


def merge_record(a, b):
    # Sum two {player: points} dicts key by key.
    return {k: a.get(k, 0) + b.get(k, 0) for k in a.keys() | b.keys()}


class Game:
    # ... the fields from the previous snippet go here ...

    def calc_points(self, avg, p):
        # Up to bip_points, minus the distance from the average (never negative).
        return max(0, self.bip_points - abs(avg - p))

    def buyin(self, user):
        """Buy in an amount, only possible if the game is in the first round."""
        if self.round > 0:
            raise GameStarted()
        self.players[user] = True

    def predict(self, user, value):
        """
        Lock in the prediction for this round. Bump the round once every
        participant has predicted.
        """
        if self.round == len(self.player_predictions):
            raise GameOver()
        self.player_predictions[self.round][user] = value
        if len(self.player_predictions[self.round]) == len(self.players):
            self.round += 1

    def proximity_points(self):
        """Get the points for each user over the completed rounds."""
        acc = {}
        for predictions in self.player_predictions[:self.round]:
            mean = sum(predictions.values()) / len(predictions)
            for k, p in predictions.items():
                acc[k] = acc.get(k, 0) + self.calc_points(mean, p)
        return acc

    def early_points(self):
        """
        Get the points each user is owed for how close they were at the
        beginning to the final consensus.
        """
        # Short circuit if the game isn't over yet.
        if self.round < len(self.player_predictions):
            return {k: 0 for k in self.players}
        first_predictions = self.player_predictions[0]
        final_predictions = self.player_predictions[self.round - 1].values()
        m = sum(final_predictions) / len(final_predictions)
        return {k: self.calc_points(m, first_predictions[k]) for k in self.players}

    def points(self):
        """Get the total points for each user at the current round."""
        return merge_record(self.proximity_points(), self.early_points())

    def allocate(self):
        """Return the funds each user should receive as a result of the game."""
        available = self.fixed_buyin * len(self.players)
        p = self.points()
        all_points = sum(p.values())
        if all_points == 0:
            # Nobody scored (e.g. no rounds completed): refund the buy-in.
            return {k: self.fixed_buyin for k in self.players}
        return {k: available * (v / all_points) for k, v in p.items()}
```

This is a simple reference that reuses the bip calculation for the end-of-game points. The first action will most likely be the most valuable, but this also creates a dynamic where the leading player may be targeted by the other players near the end of the game, which I imagine makes for interesting adversarial play. This code is so simple we could probably implement it as an APL one-liner.
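To make the scoring concrete, here is a worked single round as a standalone sketch. `round_points` mirrors `calc_points` for one round and `allocate` splits a pot by points. Both are simplified stand-ins, and they leave out the early-prediction bonus. Three players predict 100, 110, and 150, so the mean is 120 and they sit 20, 10, and 30 away from it.

```python
def round_points(predictions, bip_points=100):
    """Points for one round: bip_points minus the distance from the mean, floored at 0."""
    mean = sum(predictions.values()) / len(predictions)
    return {k: max(0, bip_points - abs(mean - p)) for k, p in predictions.items()}


def allocate(points, pot):
    """Split the pot proportionally to points (multiplying first keeps this example exact)."""
    total = sum(points.values())
    return {k: pot * v / total for k, v in points.items()}


preds = {"alice": 100, "bob": 110, "carol": 150}  # mean is 120
pts = round_points(preds)        # {'alice': 80.0, 'bob': 90.0, 'carol': 70.0}
payout = allocate(pts, pot=3 * 5)  # three players with a fixed buy-in of 5
# payout == {'alice': 5.0, 'bob': 5.625, 'carol': 4.375}
```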

State channel architecture

Since this is a game with UTXO-like on-ramping and spending of signatures, we need a database design that can store the signatures users supply, after we’ve verified them ourselves.

First, let’s flesh out our user journey from before with another type:

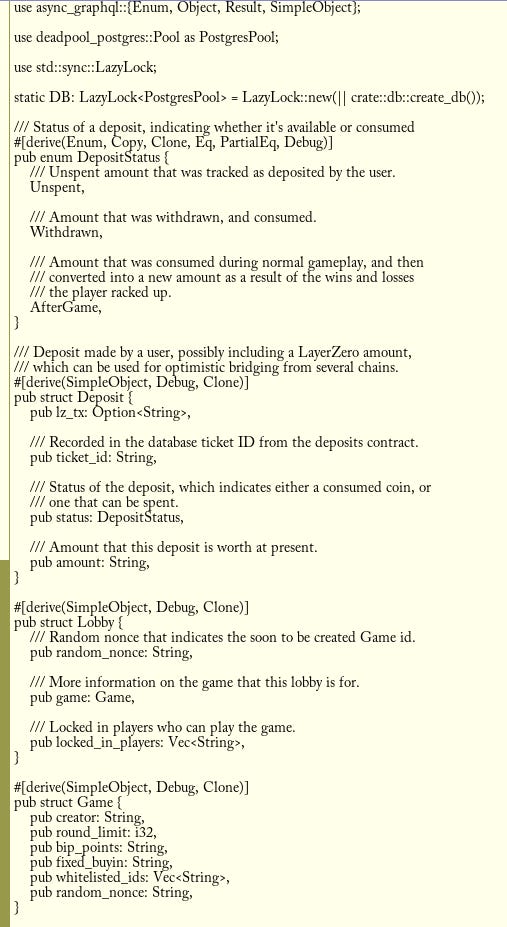

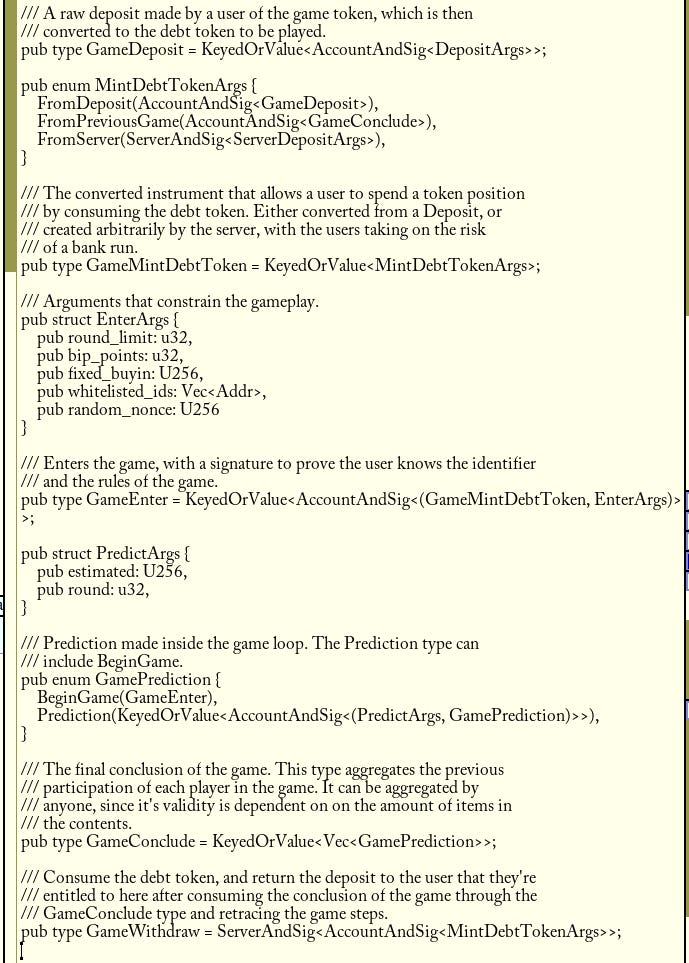

This type is literally converted to calldata. Users sign at every stage of the process. In Rust, the types would look like the following:

For signature handling in our code, we use ed25519. This lets us benefit from WebCrypto in the browser, and there is decent support in the Rust ecosystem with the ed25519_dalek package. The key can be created in the browser and remembered using cookies, then included in a signature with Permit to a contract to bond and allocate funds. This is similar to what we use for Longtail Pro’s passport frontend, and it results in a seamless experience for the most part, powered by Stylus.

There are some tools here that we’ve introduced for compression’s sake, including information on who should be signing what and whether it was previously seen on-chain, but I’m not including these in the above screenshot. Hopefully you can see how the state machine works here! The reason for this approach is that it’s completely offline until it’s finally aggregated at the end of the user journey. In the withdraw step, we ask for the server-side signature as well as the user signature. This lets us prevent abuse, like a user withdrawing their spent balance after they’ve started to accumulate a losing position.
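The co-signed withdrawal rule is a single condition: both signatures must verify over the same message. Here is a minimal sketch of that check. To keep it runnable with only the standard library, HMAC-SHA256 stands in for ed25519. HMAC is symmetric, so unlike ed25519 the verifier needs the secret key, and it is not what the real system uses. `sign`, `verify`, and `authorize_withdraw` are hypothetical names.

```python
import hashlib
import hmac


def sign(key: bytes, msg: bytes) -> bytes:
    # Stand-in for ed25519 signing; NOT a public-key signature.
    return hmac.new(key, msg, hashlib.sha256).digest()


def verify(key: bytes, msg: bytes, sig: bytes) -> bool:
    # Constant-time comparison of the expected and supplied tags.
    return hmac.compare_digest(sign(key, msg), sig)


def authorize_withdraw(msg, user_key, user_sig, server_key, server_sig):
    """A withdrawal is valid only if BOTH the user and the server signed the same message."""
    return verify(user_key, msg, user_sig) and verify(server_key, msg, server_sig)
```

If the server refuses to co-sign, for example because the user's balance has already been spent in a game, the withdrawal fails even with a valid user signature.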

A note on the encoding: we’ll use Borsh encoding, supplied as a bytes argument to the functions here. The reason for this is to maintain some level of composability with the rest of the contract ecosystem. This is technically redundant, though, as you could build the entrypoint yourself by switching on an enum field, as we do in Longtail Pro’s codebase. It’s not necessarily the most efficient approach, since length encoding will be repeated somewhat, but for our example it’s the best way, since we don’t need to overload the entrypoint.
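To show what that bytes argument looks like on the wire, here is a sketch that hand-encodes a hypothetical Rust enum following Borsh's rules. The rules are: an enum is a `u8` variant index followed by its fields in order; integers are little-endian and fixed-width; fixed-size arrays are written raw; and a `Vec` gets a `u32` length prefix. The `Action` enum and its fields are assumptions for illustration. The real types are the ones in the screenshots above.

```python
import struct

# Assumed Rust type (hypothetical):
# enum Action {
#     Enter { game_id: u64 },                                           // variant 0
#     Predict { game_id: u64, round: u32, estimated: u128, signer: [u8; 32] }, // variant 1
# }


def u8(v): return struct.pack("<B", v)
def u32(v): return struct.pack("<I", v)
def u64(v): return struct.pack("<Q", v)
def u128(v): return v.to_bytes(16, "little")


def encode_enter(game_id):
    return u8(0) + u64(game_id)


def encode_predict(game_id, round_number, estimated, signer):
    if len(signer) != 32:
        raise ValueError("signer must be 32 bytes")
    # Fixed-size arrays are written raw, with no length prefix.
    return u8(1) + u64(game_id) + u32(round_number) + u128(estimated) + signer


def encode_actions(actions):
    # Vec<Action>: u32 little-endian length prefix, then each encoded element.
    return u32(len(actions)) + b"".join(actions)
```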

The backend/database architecture

In the spirit of reuse, we’ll be using Rust for the entire package of this application, but generating GraphQL code to be run on AWS Lambda. Since Lambda functions don’t keep state between invocations, we need a persistent place to store the aggregated calldata to send to users on request.

A simple series of Postgres tables should suffice for storage, and we’ll add some helper types to make storage more explicit:

```sql
-- 0x + [u8; 32] as hex
CREATE DOMAIN ED_ADDR AS CHAR(66);

-- 0x + [u8; 64] as hex
CREATE DOMAIN ED_SIG AS CHAR(130);

-- Large enough for any uint256 (2^256 has 78 decimal digits).
CREATE DOMAIN HUGEINT AS NUMERIC(78, 0);

CREATE TABLE valleygame_game_info_1 (
    id SERIAL PRIMARY KEY,
    created_at TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP,
    round_limit INTEGER NOT NULL,
    bip_points INTEGER NOT NULL,
    fixed_buyin HUGEINT NOT NULL,
    whitelisted_ids JSONB NOT NULL,
    -- This is also used externally to convert to this game's id.
    random_nonce HUGEINT NOT NULL
);
```

The round limit is the number of rounds we can run before the game is considered finished, and the bip points (like the rest of the code) work as in the Python reference. The random nonce is needed to prevent signature reuse, and the whitelisted IDs allowlist a fixed set of users to play the game. The IDs are stored as JSON to avoid a one-to-many table relationship (and multiple insertions): we can simply read the column, then unpack it ourselves as JSON.
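One way the random nonce can prevent signature reuse is by binding every signed message to its game. The sketch below assumes a scheme where the nonce is prefixed to the payload before signing. `signing_digest` and `new_random_nonce` are hypothetical, and SHA-256 is an arbitrary choice here. The nonce is drawn as a 256-bit integer, so it fits the `HUGEINT` column.

```python
import hashlib
import secrets


def new_random_nonce() -> int:
    # 256 random bits: fits NUMERIC(78, 0).
    return secrets.randbits(256)


def signing_digest(random_nonce: int, payload: bytes) -> bytes:
    """
    Prefix the payload with the game's nonce (32 big-endian bytes) so a
    signature over the same payload can't be replayed in another game.
    """
    return hashlib.sha256(random_nonce.to_bytes(32, "big") + payload).digest()
```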

As a development practice, I like explicitly versioning my tables, and building views over raw ingested event data as opposed to mutating tables. This lets us add features while keeping the ability to roll back quickly, and supports versioned APIs and versioned encoded data structures. You can see this in the naming convention.
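Here is a small illustration of the "views over events" approach, runnable with Python's built-in `sqlite3` rather than Postgres. The tables are simplified, hypothetical stand-ins (`deposit_events_1`, `withdraw_events_1`). Instead of updating a status column, the deposit's status is derived by a versioned view over the append-only tables.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE deposit_events_1 (
    id INTEGER PRIMARY KEY,
    recipient TEXT NOT NULL,
    amount INTEGER NOT NULL
);
CREATE TABLE withdraw_events_1 (
    id INTEGER PRIMARY KEY,
    deposit_id INTEGER NOT NULL REFERENCES deposit_events_1(id)
);
-- Status is computed from the events, never stored by mutating a row.
CREATE VIEW deposit_status_1 AS
SELECT d.id, d.recipient, d.amount,
       CASE WHEN w.id IS NULL THEN 'Unspent' ELSE 'Withdrawn' END AS status
FROM deposit_events_1 d
LEFT JOIN withdraw_events_1 w ON w.deposit_id = d.id;
""")
conn.execute("INSERT INTO deposit_events_1 (recipient, amount) VALUES ('alice', 5), ('bob', 5)")
conn.execute("INSERT INTO withdraw_events_1 (deposit_id) VALUES (1)")
rows = conn.execute("SELECT recipient, status FROM deposit_status_1 ORDER BY id").fetchall()
# rows == [('alice', 'Withdrawn'), ('bob', 'Unspent')]
```

Shipping a `deposit_status_2` view alongside the first is then a cheap, reversible change.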

Let’s create some tables to accommodate the creation and spending of deposits and amounts:

```sql
-- Server-side deposits that were created from a centralised source.
CREATE TABLE valleygame_server_deposits_1 (
    id SERIAL PRIMARY KEY,
    created_at TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP,
    recipient ED_ADDR NOT NULL,
    amount HUGEINT NOT NULL,
    nonce INTEGER NOT NULL,
    sig ED_SIG NOT NULL
);

CREATE TABLE valleygame_mint_debt_token_1 (
    id SERIAL PRIMARY KEY,
    created_at TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP,
    -- The originating source of the token; unused columns are NULL.
    from_deposit_ticket HUGEINT,
    from_previous_game_id INTEGER,
    from_server_id INTEGER,
    signer ED_ADDR NOT NULL,
    sig ED_SIG NOT NULL,
    FOREIGN KEY (from_previous_game_id) REFERENCES valleygame_game_info_1(id),
    FOREIGN KEY (from_server_id) REFERENCES valleygame_server_deposits_1(id)
);

CREATE TABLE valleygame_enter_1 (
    id SERIAL PRIMARY KEY,
    created_at TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP,
    -- We get the args from the game info when someone asks for this.
    game_id INTEGER NOT NULL,
    FOREIGN KEY (game_id) REFERENCES valleygame_game_info_1(id)
);
```

Here, we do have a one-to-many table relationship, as we need to record the on-ramping/creation of the deposit asset. We overload the “debt token” table with multiple, possibly null, fields that record the originating source of the token, which we convert to the serialised type that we saw earlier.
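Converting the overloaded row back into a serialised type means collapsing the nullable source columns into one tagged variant. Here is a sketch of that step. `DebtTokenRow`, `token_source`, and the variant tags are hypothetical, and the sketch assumes exactly one source column is set per row. The same invariant could also be enforced in Postgres itself with a `CHECK (num_nonnulls(from_deposit_ticket, from_previous_game_id, from_server_id) = 1)` constraint.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DebtTokenRow:
    # Mirrors the nullable source columns of valleygame_mint_debt_token_1.
    from_deposit_ticket: Optional[int]
    from_previous_game_id: Optional[int]
    from_server_id: Optional[int]


def token_source(row: DebtTokenRow):
    """Return (variant_tag, value) for the single source column that is set."""
    sources = [
        ("DepositTicket", row.from_deposit_ticket),
        ("PreviousGame", row.from_previous_game_id),
        ("Server", row.from_server_id),
    ]
    present = [(tag, value) for tag, value in sources if value is not None]
    if len(present) != 1:
        raise ValueError(f"expected exactly one source, found {len(present)}")
    return present[0]
```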

```sql
CREATE TABLE valleygame_prediction_1 (
    id SERIAL PRIMARY KEY,
    created_at TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP,
    game_id INTEGER NOT NULL,
    estimated HUGEINT NOT NULL,
    round_number INTEGER NOT NULL,
    signer ED_ADDR NOT NULL,
    sig ED_SIG NOT NULL,
    FOREIGN KEY (game_id) REFERENCES valleygame_game_info_1(id)
);

CREATE TABLE valleygame_withdraw_1 (
    id SERIAL PRIMARY KEY,
    created_at TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP,
    debt_token_id INTEGER NOT NULL,
    user_signer ED_ADDR NOT NULL,
    user_sig ED_SIG NOT NULL,
    server_sig ED_SIG NOT NULL,
    FOREIGN KEY (debt_token_id) REFERENCES valleygame_mint_debt_token_1(id)
);
```

You can see that this is almost one-to-one with the types we defined above!

Having defined the database types, we can start to look at setting up the repo structure for our code. Then, we’ll implement the code that does the verification and the storage of the data.

Unlike some of our previous posts, we won’t implement this in the most verbose way. We’ll instead focus on the structure and broad strokes of the implementation, then elaborate further with the video that you can see at the top!

We’ll create three entrypoints in the Rust code:

```toml
[[bin]]
name = "contract"
path = "src/contract/main.rs"

[[bin]]
name = "frontend"
path = "src/frontend/main.rs"

[[bin]]
name = "graph"
path = "src/graph/main.rs"
```

The contract and frontend entrypoints will be built for the wasm32-unknown-unknown target. The contract is built with on-chain deployment in mind, while the frontend uses exported code to access the browser APIs via wasm-bindgen. graph will run as a Lambda function, so it’s built as a native binary, which I can also run natively on my laptop.

Implementing the backend

We start to implement the backend by generating a GraphQL schema consistent with the type we defined above. In our game, the server functions more like a storage backend for the signatures as they’re aggregated (and some validation mixed in to save time for the client, including checking deposits when they’re spent), with the frontend dressing up what’s available to be presentable in a format consistent with a game.

We implement a schema:

```graphql
enum DepositStatus {
  Unspent
  Withdrawn
  AfterGame
}

type Deposit {
  lzTx: String
  ticketId: String!
  status: DepositStatus!
  amount: String!
}

type Lobby {
  randomNonce: String!
  game: Game!
  lockedInPlayers: [String!]
}

type Game {
  creator: String!
  roundLimit: Int!
  bipPoints: String!
  fixedBuyin: String!
  whitelistedIds: [String!]!
  randomNonce: String!
}

type Prediction {
  amount: String!
  addr: String!
}

type Round {
  ongoing: Boolean!
  predictions: [Prediction!]
}

type Ongoing {
  rounds: [Round!]!
  game: Game!
}

type Query {
  lobbies: [Lobby!]!
  ongoing: [Ongoing!]!
  deposits(addr: String!): [Deposit!]!
}
```

For brevity’s sake, this schema is lacking comments. The DepositStatus type is indicative of the state of a UTXO, whether it should be considered spent, or available to be used in its original form. It might have changed its value after a game has been played, at which point the amount in it should be rendered differently to the end user.

The Deposit includes information about a LayerZero transaction, which can be used to optimistically progress an interaction, assuming that the underlying token is successfully bridged. If the bridging does not take place, the submission of the calldata and verification of amounts would fail on the base chain, since LayerZero’s delivery would have failed. This is a nice UX hack whose worst-case degradation (a fork or something similar) is not very likely in practice.
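The optimistic trick separates two questions: can the deposit be played with now, and will it settle? Here is a sketch of that split. The `Deposit` dataclass loosely mirrors the GraphQL type, and the `confirmed` flag and both functions are hypothetical. A deposit with an in-flight LayerZero transaction is usable immediately, but settlement on the base chain only accepts deposits whose funds actually arrived.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Deposit:
    ticket_id: str
    amount: int
    lz_tx: Optional[str] = None  # set as soon as the LayerZero message is seen
    confirmed: bool = False      # set once the bridged funds actually arrive


def usable_in_game(d: Deposit) -> bool:
    # Optimistic: an in-flight LayerZero transaction is enough to start playing.
    return d.confirmed or d.lz_tx is not None


def settle(d: Deposit) -> int:
    # Strict: settlement rejects deposits whose bridging never completed.
    if not d.confirmed:
        raise ValueError(f"deposit {d.ticket_id} never arrived; calldata rejected")
    return d.amount
```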

A Lobby is made available with information on the game, as well as any players that have committed to play. A problem with this approach is that a user choosing to become unavailable leaves the game in a degraded state where the funds are stuck. We won’t be addressing this here, though a simple solution is to include an optimistic submission stage at the end of the game, where a user can stake their tokens on the liveness of each player. Players that fail to submit in time lose their tokens to the staker, but if every player submits (all the way up to the completed game), the staker loses their stake.
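The liveness stake described above settles with one branch. This is a sketch of that rule only. `settle_liveness` is hypothetical, and where a forfeited stake ends up isn't specified in the post, so the sketch just reports the staker's payout as zero in that case.

```python
def settle_liveness(stake, deposits, submitted):
    """
    deposits: {player: amount}; submitted: set of players who submitted in time.
    Returns (staker_payout, players_who_forfeited).
    """
    missing = [p for p in deposits if p not in submitted]
    if missing:
        # The staker keeps their stake and collects the absent players' funds.
        return stake + sum(deposits[p] for p in missing), missing
    # Everyone submitted: the staker loses the stake.
    return 0, []
```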

Finally, the Ongoing type is available to include information on ongoing games that the user is participating in based on their Authorization header. This includes Rounds that were played, and the predictions made by each user.

I implemented the schema manually (pain), then talked to the database using boring raw SQL. Luckily, Claude, used conversationally, handled most of the translation of my schema. Parts of the code look like the following:

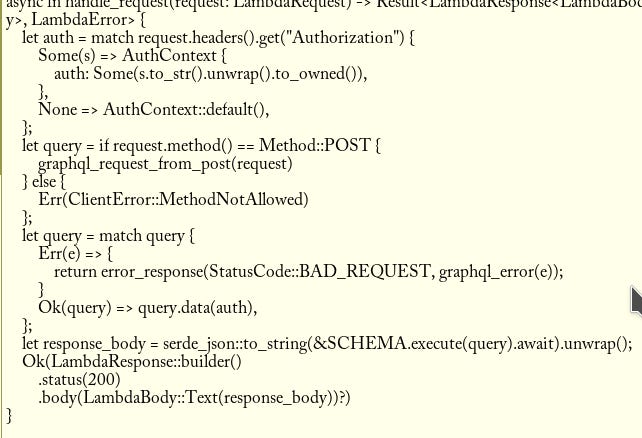

These types resemble the schema from above. Elsewhere, we set up a Postgres database pool, and later in the code we implement the lookups of these values. We also start to define the entrypoint for the graph binary to run inside AWS Lambda:

This is some basic setup code that lets the schema use the Authorization header to branch how the lobbies request is loaded from the database.
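Since the setup code itself is in the screenshot, here is a rough sketch of what branching on the Authorization header could look like. It rests on loud assumptions. The header is taken to carry the caller's address directly, whereas a real deployment would verify a signature first. Each lobby is a dict with a `whitelisted_ids` list. Anonymous callers get nothing. `authorization` and `resolve_lobbies` are hypothetical names.

```python
def authorization(headers):
    # HTTP header names are case-insensitive.
    return next((v for k, v in headers.items() if k.lower() == "authorization"), None)


def resolve_lobbies(headers, lobbies):
    """Return only the lobbies whose allowlist includes the caller."""
    caller = authorization(headers)
    if caller is None:
        return []
    return [lobby for lobby in lobbies if caller in lobby["whitelisted_ids"]]
```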

Implementing the contract entrypoint

Finally, let’s define the entrypoint to our contract! Let’s define an interface like the following:

```solidity
interface IValleyGame {
    function nonces(address spender) external view returns (uint256);

    // Not `view`: depositing modifies state.
    function deposit(uint256 amount, address recipient) external returns (uint256);

    function play(bytes calldata data) external;
}
```

The first function is simple nonce management. It’s useful for optimistically depositing funds using LayerZero messaging: the user simply initiates the deposit, and their nonce can be combined with their address to create the deposit ticket. The second function is a simple interface for depositing the native asset using the deposit ticket. It just takes the amount and the creditor address. One issue with this approach is that it opens a griefing vector, but we’ll continue as-is for now.
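Combining an address with its nonce gives a ticket that both the server and the contract can compute independently, before the bridged funds land. Here is a sketch of such a derivation. `deposit_ticket` is hypothetical. SHA-256 stands in for whatever hash the contract uses, and an EVM contract would more typically use keccak256, which Python's `hashlib` doesn't provide under that name.

```python
import hashlib


def deposit_ticket(address: str, nonce: int) -> str:
    """Hash a 20-byte EVM address with the depositor's nonce (as a 32-byte word)."""
    addr = bytes.fromhex(address.removeprefix("0x"))
    if len(addr) != 20:
        raise ValueError("expected a 20-byte EVM address")
    return "0x" + hashlib.sha256(addr + nonce.to_bytes(32, "big")).hexdigest()
```

Because the nonce increments with each deposit, every deposit from the same address gets a fresh ticket.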

Finally, the play function is the actual interaction with the contract. This is the code that takes the Borsh-encoded data we saw earlier and generates the side effects by stepping through the rolled-up calldata!
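Here is a rough sketch of "stepping through the rolled-up calldata", decoding a Borsh `Vec` of a hypothetical two-variant `Action` enum and emitting side effects in order. Variant 0 is `Enter { game_id: u64 }` and variant 1 is `Predict { game_id: u64, round: u32, estimated: u128, signer: [u8; 32] }`. These types are assumptions, and the real contract would also verify signatures, which this sketch skips.

```python
import struct


def play(calldata: bytes):
    """Decode a Borsh Vec<Action> and return the side effects in order."""
    pos = 0

    def take(n):
        nonlocal pos
        if pos + n > len(calldata):
            raise ValueError("calldata truncated")
        chunk = calldata[pos:pos + n]
        pos += n
        return chunk

    (count,) = struct.unpack("<I", take(4))  # Vec length prefix
    effects = []
    for _ in range(count):
        tag = take(1)[0]  # enum variant index
        if tag == 0:
            (game_id,) = struct.unpack("<Q", take(8))
            effects.append(("enter", game_id))
        elif tag == 1:
            game_id, round_number = struct.unpack("<QI", take(12))
            estimated = int.from_bytes(take(16), "little")
            signer = take(32)
            effects.append(("predict", game_id, round_number, estimated, signer.hex()))
        else:
            raise ValueError(f"unknown action variant {tag}")
    if pos != len(calldata):
        raise ValueError("trailing bytes after the last action")
    return effects
```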

We’ll have to pick this up next time! I’m out of time for now.

I like games with emergent social properties. Games like this include Nomic and Aneurism IV, where the players themselves create the structure of the gameplay. In the next post, we’ll build the rest of the contract, including the functions that translate the local state to side effects inside a reimplementation of the pseudocode. Maybe later we’ll even build a video game using this method in between our usual programming, who knows? I have something in mind aside from this…

Stay tuned!

As a reminder, you can find the code here:

Stylus Saturdays is brought to you by… the Arbitrum DAO! With a grant from the Fund the Stylus Sprint program. You can learn more about Arbitrum grants here: https://arbitrum.foundation/grants

Follow me on X: @baygeeth and on Farcaster!

Side note: I develop Superposition, a defi-first chain that pays you to use it. You can check our ecosystem of dapps out at https://superposition.so!