Bucharest Proof of Work introduction, Superposition Passport first look, interview with Hamza from Enclave

29th March, 2025

Hello again! In this update I’m excited to share details of the Gas Redux Challenge at ETH Bucharest, and to feature Hamza from Enclave/Gnosis Guild. I’m also excited to share details of Superposition Passport, a foundation for on-chain solving dApps built on custom compressed calldata carrying ed25519 signatures. We’ll recap the Chainlink price feed example next time.

To recap: Stylus Saturdays is a (mostly) biweekly newsletter examining the Arbitrum Stylus ecosystem. Stylus is a WASM frontend to the EVM stack available on Arbitrum. Write your contracts in virtually any programming language, and deploy them with full composability with Solidity and Vyper smart contracts.

Introducing Bucharest PoW

In the final stage of the Stylus Saturdays Gas Redux ETH Bucharest challenge, contestants must submit gas-efficient solutions for Bucharest PoW puzzles. Bucharest Proof of Work (PoW) is a type of Proof of Work algorithm invented for our gas competition.

Refreshing everyone: what’s Proof of Work? In the traditional Bitcoin sense, Proof of Work is the process of taking the hash of the block header (the combined transactions with the fee-receiving address and more set), hashing it with a randomly chosen nonce, and repeating this process until the resulting hash has the number of leading zeroes that the difficulty level requires. This type of algorithm is known as Hashcash.
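A minimal sketch of that loop, under some loud assumptions: the standard library's `DefaultHasher` stands in for SHA256 purely to keep the example dependency-free, the digest is only 64 bits wide, and nonces are tried sequentially instead of randomly. The names `toy_hash`, `mine`, and `verify` are made up for illustration.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::Hasher;

/// Stand-in for SHA256 (assumption): hashes the header bytes with the nonce.
fn toy_hash(header: &[u8], nonce: u64) -> u64 {
    let mut hasher = DefaultHasher::new();
    hasher.write(header);
    hasher.write_u64(nonce);
    hasher.finish()
}

/// Try nonces until the digest has at least `difficulty` leading zero bits.
/// Each extra bit of difficulty doubles the expected number of attempts.
fn mine(header: &[u8], difficulty: u32) -> u64 {
    let mut nonce = 0u64;
    while toy_hash(header, nonce).leading_zeros() < difficulty {
        nonce += 1;
    }
    nonce
}

/// Checking a claimed solution costs a single hash.
fn verify(header: &[u8], nonce: u64, difficulty: u32) -> bool {
    toy_hash(header, nonce).leading_zeros() >= difficulty
}
```

The asymmetry is the point: `mine` may loop thousands of times, while `verify` hashes once.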

There are other Proof of Work algorithms, some of them “memory latency bound”, like Cuckoo Cycle Proof of Work, which is used by Grin, a blockchain built on the Mimblewimble protocol. This Proof of Work algorithm is limited by memory latency, since its solution depends on traversing large, sparse blocks of memory to find a cycle of a specific length in a graph.

Cuckoo Cycle work is the process of taking the block header hash, then combining it with a sequentially chosen nonce (a number) using a hashing function called a keyed hashing function. From the results of the keyed hash function, an edge (a pair of endpoints) is created in a bipartite graph. This repeats until a cycle is found in the graph, among the nonces given, of a specific length chosen for the difficulty. This type of algorithm is possible to parallelise, but not without the cost of contention, which causes memory latency to be the main bottleneck.

Keyed hash functions are a type of hash function where, instead of taking a single argument and producing a digest from it (like SHA256 or Keccak256/SHA3), a second argument is supplied: a randomly chosen seed. The digest the keyed hashing function generates is created from both the seed and the hashing argument. An example of this in the Cuckoo Cycle context is SipHash. Imagine that instead of database hashing in the traditional sense (having a project-wide “salt”), each individual row has its own seed, which is passed as an argument to the hashing function. SipHash performs well for small arguments, hence its use case. This is useful in a hashtable context: imagine a routing table built this way, where an attacker could otherwise try to abuse a predictable hashing function to get packets routed the wrong way.
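To make the "second argument" concrete, here is a toy keyed hash. It is not SipHash and is not safe against a determined attacker: it is FNV-1a with the key mixed into the starting state, chosen only because it fits in a few lines. The `edge` function loosely follows the Cuckoo Cycle idea of hashing `2 * nonce` and `2 * nonce + 1` to get the two endpoints of one edge; all names and the graph sizing are assumptions for illustration.

```rust
const FNV_OFFSET: u64 = 0xcbf29ce484222325;
const FNV_PRIME: u64 = 0x100000001b3;

/// Toy keyed hash (NOT SipHash): FNV-1a whose initial state depends on `key`.
fn toy_keyed_hash(key: u64, data: &[u8]) -> u64 {
    let mut state = FNV_OFFSET ^ key;
    for &byte in data {
        state ^= byte as u64;
        state = state.wrapping_mul(FNV_PRIME);
    }
    state
}

/// Hashtable use: without knowing `key`, an attacker cannot predict
/// which bucket a given input will land in.
fn bucket(key: u64, data: &[u8], buckets: u64) -> u64 {
    toy_keyed_hash(key, data) % buckets
}

/// Cuckoo-Cycle-style edge: one nonce yields one endpoint on each side
/// of a bipartite graph with `side` nodes per side.
fn edge(key: u64, nonce: u64, side: u64) -> (u64, u64) {
    let u = toy_keyed_hash(key, &(2 * nonce).to_le_bytes()) % side;
    let v = toy_keyed_hash(key, &(2 * nonce + 1).to_le_bytes()) % side;
    (u, v)
}
```

In the Proof of Work setting the key would be derived from the block header, so every block defines a fresh graph to search.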

Cuckoo Cycle hashing is unique in the broader context for a few reasons, the main one being that it lacks the substantial environmental impact of Hashcash. With repeated SHA256 hashing, CPUs run hot working hard to find the solution, and there is an incentive for large-scale mining operations to spin up, with a substantial energy impact, to maximise the parallelism for solutions and to design better ASICs. Cuckoo Cycles are better for the environment because they equalise the opportunities for solving the problem efficiently. It’s not possible to spin up a superpowered, dirty-energy-consuming farm that mines Cuckoo Cycles in their traditional form. This is due to memory latency being largely impossible to optimise for at scale, thanks to restrictions of the speed of light. In networking, throughput isn’t getting better. Our CAPACITY is, which might make people think that speed is, but this is due to the opportunities of a good connection being made more available. We’re not pushing this further, thanks to problems involving the speed of light and the density of transistors, and Moore’s Law hasn’t been accurate for some time.

This isn’t to say that ASICs can’t be used with Cuckoo Cycles. Any kind of software solution could be lifted to an optimal hardware form, and with the future of LLVM IR fabbing bespoke chips for algorithms staring down the barrel at us, this is a future we should simply acknowledge and adapt to, as in our problem space it simply distorts the economics if we externalise the social impact.

You know who benefits from memory latency optimisations just like a server cluster does? Laptop workstations and phones. Eagle-eyed readers of our silly little newsletter would be aware that Apple’s CPUs are lower on the clock rate, but still perform excellently from a user’s perspective. This is due to a mixture of optimisations in compilers and interpreters, and you might even notice that ARM itself has an operation that JavaScript interpreters use (among other things). Software developers push the hardware by tightening the distance between software and hardware, as more complex instructions do micro-parallelism on the chip to solve problems faster, which is also where the famous speculative execution bug of a few years ago (Spectre) came from.

So with Cuckoo Cycle hashing, a small phone could go toe to toe with an ASIC farm, unlike a Hashcash “dirty energy” farm operating out of Mongolia hacking away with a large-scale hydraulic energy plant. Until we solve foundational physics problems, ASICs are not a genre-defining class with this type of algorithm.

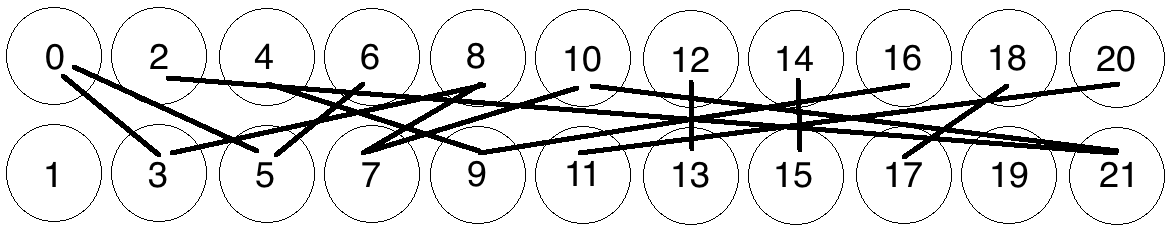

In Bucharest PoW, like Cuckoo Cycles, discoverers search for a range of nonces to combine with a block header to prove they discovered the solution to produce the next block. Unlike Cuckoo Cycles, each nonce is used to derive a position on a large chess board, and a piece type. The nonce is increased until the most recently placed king is put in check for the first time.

A proving function then retrospectively examines the nonces until it finds the earliest nonce used to place a piece on the board that contributed to the king’s check. Like the Cuckoo Cycle, where a proof is simply the lowest and highest nonce used to construct the graph, the proof here is the lowest nonce, for the first piece that helped place the king into check, together with the highest nonce. This is then transmitted as proof of finding the solution.
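A sketch of how a nonce might be turned into a placement. Everything concrete here is an assumption made for illustration, not the competition's specification: the board side, the piece set, which bits of the digest pick the square and the piece, and the use of `DefaultHasher` in place of whatever hash the real puzzle uses. Check detection is left out entirely.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::Hasher;

/// Hypothetical piece set; the real puzzle may use a different one.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Piece { Pawn, Knight, Bishop, Rook, Queen, King }

const PIECES: [Piece; 6] = [
    Piece::Pawn, Piece::Knight, Piece::Bishop,
    Piece::Rook, Piece::Queen, Piece::King,
];

/// Hypothetical side length of the "large chess board".
const BOARD_SIDE: u64 = 1024;

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
struct Placement { x: u64, y: u64, piece: Piece }

/// Derive a square and a piece type from the header and one nonce.
fn derive_placement(header: &[u8], nonce: u64) -> Placement {
    let mut hasher = DefaultHasher::new();
    hasher.write(header);
    hasher.write_u64(nonce);
    let digest = hasher.finish();
    // Low bits choose the square, higher bits choose the piece.
    let square = digest % (BOARD_SIDE * BOARD_SIDE);
    let piece = PIECES[((digest >> 32) % PIECES.len() as u64) as usize];
    Placement { x: square % BOARD_SIDE, y: square / BOARD_SIDE, piece }
}

/// Increase the nonce from `start` until a king is placed, returning that nonce.
fn first_king(header: &[u8], start: u64) -> u64 {
    let mut nonce = start;
    while derive_placement(header, nonce).piece != Piece::King {
        nonce += 1;
    }
    nonce
}
```

A full solver would keep placing pieces after the king and test for check after each placement, which is where the board representation starts to matter for gas.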

In our game at ETH Bucharest, submitters strive to find the most gas-efficient solution for ETH Bucharest Proof of Work. There should be plenty of opportunities for optimisation in the board representation and the proving function! A submitter registers their contract with the scoring contract, which causes an off-chain worker to randomly create several hashes and simulate the contract against them at the node. The median gas performance is chosen, and then the transaction is committed on-chain.
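Taking the median of the simulated runs could look like the sketch below. The function name and the choice to average the two middle samples for an even count are assumptions; the worker's actual behaviour isn't specified here.

```rust
/// Median of the gas used across simulated runs, or `None` with no samples.
fn median_gas(samples: &[u64]) -> Option<u64> {
    if samples.is_empty() {
        return None;
    }
    let mut sorted = samples.to_vec();
    sorted.sort_unstable();
    let mid = sorted.len() / 2;
    if sorted.len() % 2 == 1 {
        Some(sorted[mid])
    } else {
        // Midpoint of the two middle values, written to avoid overflow.
        let (low, high) = (sorted[mid - 1], sorted[mid]);
        Some(low + (high - low) / 2)
    }
}
```

Using the median instead of the mean means a single unlucky hash that takes far more gas than usual won't sink an otherwise efficient submission.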

Registrations to the scoring contract must be submitted with a link to a Git repository, and the hash of the revision of the repo. The repository must be made available to other participants. The code will be inspected by the judging team to disqualify bad participants. The most optimal solution at the time receives “tenancy” of the yield generated on a curve:

So, your winning solution will earn you a certain amount of revenue per second, which someone could potentially take from you by looking at your submission, identifying an optimisation, then resubmitting! The contract won’t accept revisions except those that are 5% more effective than the past submission. The submitter with the most points at the end of the game is the winner!
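Here is a rough model of that tenancy rule. It assumes "5% more effective" means the new submission uses at most 95% of the incumbent's gas, and it replaces the yield curve with a flat, made-up `POINTS_PER_SECOND`; the `Game` type and its fields are hypothetical.

```rust
use std::collections::HashMap;

/// Hypothetical flat rate; the real game pays out along a curve.
const POINTS_PER_SECOND: u64 = 1;

/// True if the candidate uses at most 95% of the incumbent's gas.
fn beats_incumbent(candidate_gas: u64, incumbent_gas: u64) -> bool {
    (candidate_gas as u128) * 100 <= (incumbent_gas as u128) * 95
}

struct Game {
    /// Current tenant: (submitter, gas, timestamp tenancy began).
    tenant: Option<(String, u64, u64)>,
    points: HashMap<String, u64>,
}

impl Game {
    fn new() -> Self {
        Game { tenant: None, points: HashMap::new() }
    }

    /// Returns true if `who` takes over the tenancy at time `now`.
    fn submit(&mut self, who: &str, gas: u64, now: u64) -> bool {
        if let Some((holder, best, since)) = self.tenant.take() {
            if !beats_incumbent(gas, best) {
                // Not enough of an improvement: the incumbent stays.
                self.tenant = Some((holder, best, since));
                return false;
            }
            // Credit the outgoing tenant for the time they held the spot.
            let earned = now.saturating_sub(since) * POINTS_PER_SECOND;
            *self.points.entry(holder).or_insert(0) += earned;
        }
        self.tenant = Some((who.to_string(), gas, now));
        true
    }
}
```

The threshold stops someone from shaving a single unit of gas off your published solution and taking the tenancy for free.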

One of Stylus’ strengths lies in the large pages of memory you can allocate and use relatively cheaply. I’m excited to see how people perform with this problem!

Introducing Superposition Passport

Superposition Passport is Superposition’s smart account for on-chain solving of off-chain matched trades with Longtail Pro. Superposition Passport is unique in that it’s a fully non-custodial matching and solving engine: unlike competing DEXes where amounts must be on-ramped or deposited to be used, Superposition Passport enables traders to simply sign an approval message to a smart account, which has its trades signed in the browser using ed25519 blobs generated with WebCrypto. From a user’s perspective, they simply sign once, and they can trade without any popups or notifications just like a CEX, but their money never left their wallet.

Superposition Passport is also unique in its calldata and on-chain compression: Borsh is leveraged with generated deserialisation code. Borsh is notable for its use in Solana and NEAR as the default encoding format. Borsh is a low-overhead encoding format and, if used with little-endian encoded blobs, massively reduces the visible size of calldata. EVM-compatible nodes price zero bytes in calldata at a discount, which muddles the optimisation advantages of using custom encoding formats. However, owing to cheaper computation gas with Stylus (this is called ink), we can decompress calldata on-chain that was compressed with LZSS, a cheap encoding scheme, and still benefit from some gas savings. This is useful if we must aggregate signatures (which we do). Superposition Passport also facilitates backstopping liquidity with smart contracts, perhaps useful for stubbing out missing liquidity with an AMM. This compression approach is useful for optimising the calldata that carries the request to the contract.
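To show why LZSS is cheap to undo, here is a toy decompressor. The token layout is invented for this example and is not the format Superposition Passport uses: a flag byte describes up to eight following tokens, least significant bit first, where a 1 bit means one literal byte and a 0 bit means a two-byte back-reference (distance, then length).

```rust
/// Decompress a toy LZSS stream. Returns `None` on malformed input.
fn lzss_decompress(input: &[u8]) -> Option<Vec<u8>> {
    let mut out: Vec<u8> = Vec::new();
    let mut i = 0;
    while i < input.len() {
        let flags = input[i];
        i += 1;
        for bit in 0..8 {
            if i >= input.len() {
                break; // the stream may end partway through a flag group
            }
            if flags & (1 << bit) != 0 {
                // Literal: copy one byte straight through.
                out.push(input[i]);
                i += 1;
            } else {
                // Back-reference: repeat `length` bytes from `distance` back.
                let distance = *input.get(i)? as usize;
                let length = *input.get(i + 1)? as usize;
                i += 2;
                if distance == 0 || distance > out.len() {
                    return None;
                }
                let start = out.len() - distance;
                for k in 0..length {
                    // Byte-by-byte so overlapping copies (runs) work.
                    let byte = out[start + k];
                    out.push(byte);
                }
            }
        }
    }
    Some(out)
}
```

For example, the four bytes `[0x01, 0x00, 0x01, 0x1f]` expand to 32 zero bytes: one literal zero, then a reference that repeats it 31 times. Decompression is only loops and copies, which is the kind of work that is cheap when computation is cheap.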

The most optimal EVM compression is often simply interpreting a bespoke stack machine with a custom calldata package. With a Borsh-heavy approach, we could do something similar, by having a recursive datatype that’s matched easily.

This code uses LZSS to decompress a Borsh-encoded structure (Op below), then matches on the enum variant that was used. Following this, it dispatches to the function we want. The storage type used implements storage fully. Finally, the contract returns the error type if things went wrong. Despite the matching, we retain some EVM composability by having an error type we created using Solidity, which is encoded to something Ethereum tooling would know how to work with.
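A minimal sketch of that decode-then-dispatch shape, and explicitly not the Superposition Passport source. The `Op` variants, the `Error` type, and the `run`/`execute` functions are hypothetical, the input is assumed to be already decompressed, and Borsh is decoded by hand (a one-byte variant index, little-endian integers, a `u32` length before a list) in place of the generated deserialisation code.

```rust
/// Hypothetical recursive operation type.
#[derive(Debug, PartialEq)]
enum Op {
    Transfer { to: [u8; 20], amount: u64 }, // variant index 0
    Batch(Vec<Op>),                         // variant index 1
}

#[derive(Debug, PartialEq)]
enum Error { Malformed, Overflow }

/// Split `n` bytes off the front of the cursor.
fn take<'a>(buf: &mut &'a [u8], n: usize) -> Option<&'a [u8]> {
    let slice: &'a [u8] = *buf;
    if slice.len() < n {
        return None;
    }
    let (head, tail) = slice.split_at(n);
    *buf = tail;
    Some(head)
}

/// Hand-rolled Borsh decoding. A real contract should also cap nesting depth.
fn decode_op(buf: &mut &[u8]) -> Option<Op> {
    match take(buf, 1)?[0] {
        0 => {
            let mut to = [0u8; 20];
            to.copy_from_slice(take(buf, 20)?);
            let mut raw = [0u8; 8];
            raw.copy_from_slice(take(buf, 8)?);
            Some(Op::Transfer { to, amount: u64::from_le_bytes(raw) })
        }
        1 => {
            let mut raw = [0u8; 4];
            raw.copy_from_slice(take(buf, 4)?);
            let mut ops = Vec::new();
            for _ in 0..u32::from_le_bytes(raw) {
                ops.push(decode_op(buf)?);
            }
            Some(Op::Batch(ops))
        }
        _ => None,
    }
}

/// Dispatch on the variant; summing amounts stands in for real handlers.
fn execute(op: &Op, total: &mut u64) -> Result<(), Error> {
    match op {
        Op::Transfer { amount, .. } => {
            *total = total.checked_add(*amount).ok_or(Error::Overflow)?;
            Ok(())
        }
        Op::Batch(ops) => ops.iter().try_for_each(|inner| execute(inner, total)),
    }
}

fn run(calldata: &[u8]) -> Result<u64, Error> {
    let mut cursor = calldata;
    let op = decode_op(&mut cursor).ok_or(Error::Malformed)?;
    if !cursor.is_empty() {
        return Err(Error::Malformed); // reject trailing bytes
    }
    let mut total = 0u64;
    execute(&op, &mut total)?;
    Ok(total)
}
```

Because `Batch` contains more `Op` values, one small encoded package can describe an arbitrary tree of actions, which is what makes the approach feel like a bespoke stack machine.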

It’s very early days here with this contract, but we’re excited to share it with the community MIT licensed (like usual), and to integrate it directly with 9lives and the upcoming Superposition Pro release.

Interview with Hamza from Enclave/Gnosis Guild

I’m super lucky to have had the chance to feature a seasoned cryptographer like Hamza!

Who are you, and what do you do?

I'm Hamza Khalid, an Applied Cryptographer and Protocol Engineer at Gnosis Guild, the initial development team building Enclave. I got into web3 pretty early on when I used to lurk around Bitcoin forums since I was 14. I used to offer web dev services in exchange for bitcoin at the time, as you can imagine, since as a 14-year-old I did not have access to a bank account, so bitcoin was a game-changer for me. That early experience not only introduced me to the power of decentralized money but also set me on the path toward exploring cryptography, distributed systems, and the broader implications of web3.

Now I work on Enclave, an open-source protocol that makes secure, encrypted computation possible using fully homomorphic encryption (FHE), zero-knowledge proofs (ZKPs), and distributed threshold cryptography (DTC). It’s a mouthful, but basically, we’re making it possible to compute on private data without ever decrypting it, leveraging Encrypted Execution Environments (E3s) for verifiable, privacy-preserving computation.

I've been all over the tech map, from full-stack web development/devops/cybersecurity to cryptography, from AI to blockchain development across multiple ecosystems. I've built cross-chain bridges, NFT platforms, written ZKP circuits for identity verification, and developed federated learning ML models with FHE on the blockchain.

What web3 ecosystems have you worked in?

Before working at Gnosis Guild, I was a web3 lead at permission.io and the lead blockchain developer at 0xAuth (now David.inc). I've worked across a bunch of different chains. I started with Solana and got really into Rust-based smart contracts there. After that, I picked up Ethereum, which honestly took me a while to get used to because my brain was so wired for how Solana worked.

Since then, I've worked with Polygon, Arbitrum, Base, and even some private blockchains. I've used Soroban (another Rust-based smart contract framework) to write smart contracts for the Stellar blockchain.

Working across these platforms has given me a well-rounded perspective on the strengths and trade-offs of each ecosystem, and how they can complement each other in the broader web3 landscape.

What are your impressions of working with Stylus?

At Gnosis Guild, we are working on integrating Stylus as a compute provider for Enclave. A compute provider is essentially a service that takes encrypted or raw input data, performs specific computations securely, and outputs verifiable results that can be trusted on-chain. Currently, our process relies on zkVMs; specifically, we perform all the heavy computations off-chain inside a zero-knowledge virtual machine (like Risc0). This method involves generating a zk proof that attests to the correctness of the computation before it’s finally verified on-chain.

Working with zkVMs has given us strong security guarantees, ensuring that even though computations are done off-chain, they are still verified rigorously on-chain. However, this approach comes with additional complexity and latency due to the extra steps required to generate and verify zk proofs, and they are pretty slow as well.

By contrast, Stylus introduces an on‑chain compute provider that operates using a WASM environment. This allows us to write our smart contracts in Rust while retaining full EVM interoperability. With Stylus, computations are executed directly on-chain, meaning that the verification is inherent to the consensus mechanism, simplifying the architecture and reducing overhead. So Stylus will not only boost performance and lower gas costs but also streamline development by eliminating the need for a separate zk proof step.

Overall, my impressions of working with Stylus are very positive. Having tried a bunch of smart contract languages in Rust, Stylus has been the most fun and easiest to work with by far.

My experience with Solana and Stellar smart contracts was quite different since they're not EVM chains; the whole development flow feels different. But with Stylus, it was really easy to get started immediately.

What software do you use?

I keep it really simple with Windows + WSL Ubuntu + VSCode/Cursor AI. I've tried setting up Neovim and used it for a bit, but I always end up going back to VSCode. Neovim is awesome, but the time it takes to set things up and fix stuff when it breaks just isn't worth it for me.

I also don't bother with custom Linux distros. I've watched my coworkers struggle with dependencies, updates, and broken kernels, and decided that's not for me. I love customizing things to make them look pretty, but I always end up reverting to plain old Windows for simplicity.

One of my absolute favorite tools on Windows is PowerToys; it lets me stick to my keyboard and avoid using the mousepad as much as possible.

I also play around with a ton of AI tools — Claude, Cursor, ChatGPT, Gemini, different architectures, RAG systems, you name it. I love jailbreaking LLMs and getting them to do stuff they're not supposed to do, probably because I've always been into CTFs and see it as a fun challenge.

What hardware do you use?

My daily driver is an ASUS Strix G17 with 32GB RAM and an RTX 3070TI. It's a "Gaming Laptop," but my main use for it is to train ML models and handle Rust compilations and cryptographic experiments without melting down.

I also experiment a lot with hardware, like Arduino and ESP32 microcontrollers, courtesy of my work in embedded systems programming in Rust.

I’m also into gaming, and I’ve put more hours into Dota 2 than I’d like to admit, though these days, I'm gravitating towards single-player games like Prey, A Plague Tale, and revisiting classics like Max Payne. Multiplayer used to be my thing until I realized I preferred trolling teammates over actually winning — fun for me, maybe less so for them.

What's a piece of advice you'd give someone new to Web3?

If you’re new, it can feel overwhelming: this is a wide-open, rapidly evolving space. You’re stepping into a frontier where you can build and reimagine systems that traditional web platforms simply can’t support. Ask dumb questions; they’re often the smartest ones. Dive into communities, tinker with protocols, and embrace the cringe PFPs. Don’t be afraid to get your hands dirty; you’ll need to dive deep to uncover where your energy fits best. Experiment a lot, stay curious, stay skeptical, and remember to have fun.

How can we get in touch with you?

I’m always eager to connect with fellow developers, researchers, or anyone interested in the evolving world of web3 and secure computation. You can reach me via:

Email: me@hmzakhalid

GitHub: github.com/hmzakhalid

For Enclave, you can follow on X and subscribe to the blog. For partnerships, business opportunities, and more, join the Enclave Telegram group.

Stylus Saturdays is brought to you by… Arbitrum! With a grant from the Fund the Stylus Sprint program. You can learn more about Arbitrum grants here: https://arbitrum.foundation/grants

Follow me on X: @baygeeth

Side note: I develop Superposition, a defi-first chain that pays you to use it. You can check our dapps out at https://superposition.so.